AI INFRASTRUCTURE ALLIANCE

Building the Canonical Stack for Machine Learning

Our Work

At the AI Infrastructure Alliance, we’re dedicated to bringing together the essential building blocks for the Artificial Intelligence applications of today and tomorrow.

Right now, we’re seeing the evolution of a Canonical Stack (CS) for machine learning. It’s coming together through the efforts of many different people, projects and organizations, and no one group can build it alone. That’s why we created the Alliance: to act as a focal point that brings these groups together in one place.

The Alliance and its members bring clarity to this quickly developing field by highlighting the strongest platforms and showing how the different components of a complete enterprise machine learning stack can and should interoperate. We deliver essential reports and research, virtual events packed with fantastic speakers, and visual graphics that make sense of an ever-changing landscape.

Download the Enterprise Generative AI Adoption Report

Oct 2023

Our biggest report of the year covers the wide world of agents, large language models and smart apps. This massive guide dives deep into the emerging next-gen AI stack, prompt engineering, open source and closed source generative models, common app design patterns, legal challenges, LLM logic and reasoning, and more.

Get it now. FREE.

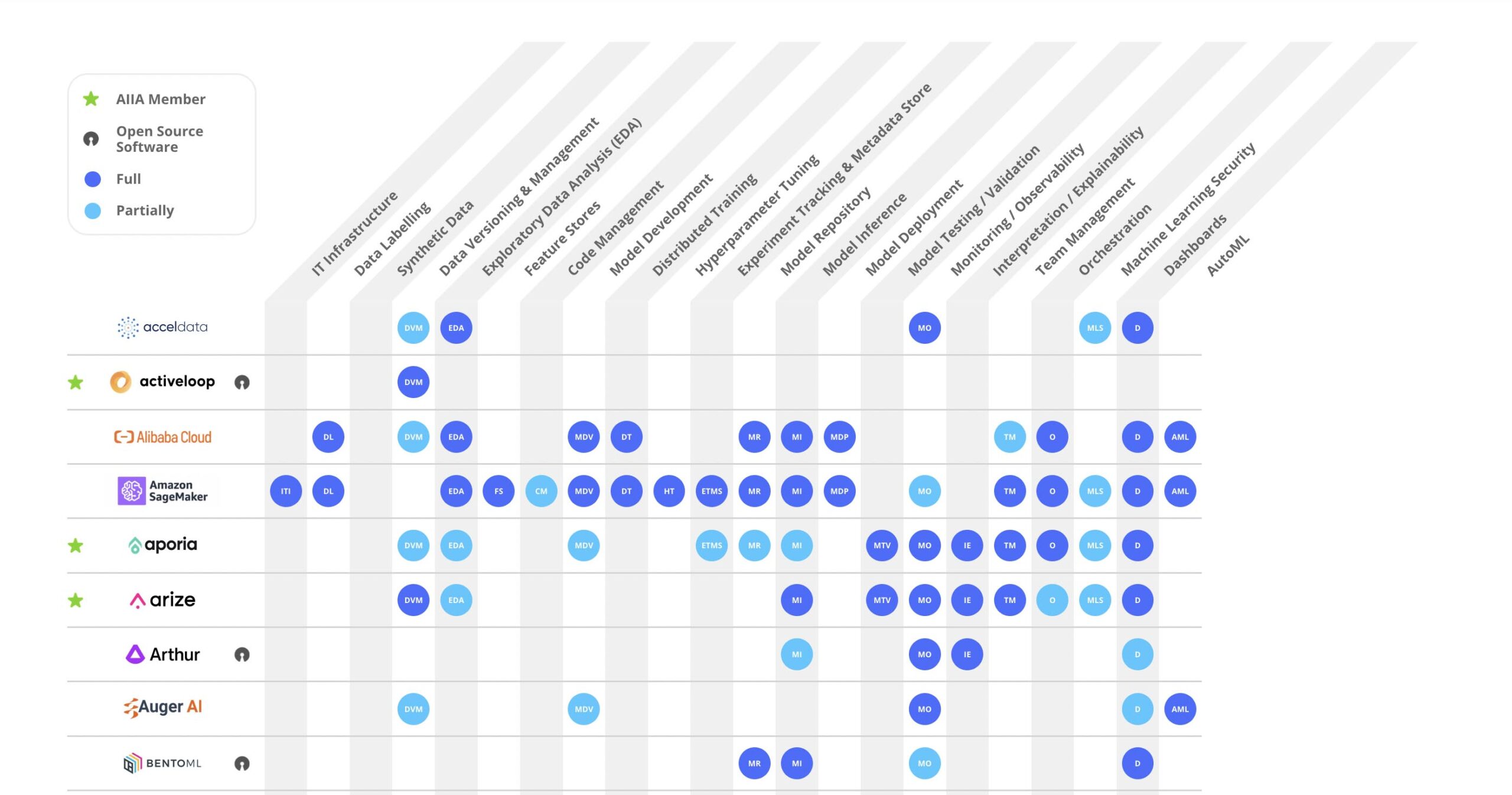

AI Landscape

Check out our constantly updated AI Landscape Graphic, which shows the full range of capabilities of major MLOps tools instead of pigeonholing each one into a single box that highlights only one of its characteristics.

Today’s MLOps tooling offers a broad sweep of possibilities for data engineering and data science teams. You can’t easily see those capabilities in typical graphics that just show a bunch of logos, so we’ve engineered a better infographic that lets you quickly figure out whether a tool does what you need now.

Events – Past and Future

Check here for our upcoming events and to watch videos from past events. We put on three to four major events every year, and they’re packed with fantastic speakers from across the AI/ML ecosystem.

ARTICLES

Debugging Python-Based Microservices Running on a Remote Kubernetes Cluster

At Modzy we’ve developed a microservices-based model operations platform that accelerates the deployment, integration, and governance of production-ready AI. Modzy is built on top of Kubernetes, which we selected for its scheduling, scalability, fault tolerance, and...

Building your MLOps roadmap

As more companies wade into the AI waters and begin taking the first steps to operationalize models, they reach the point where they need to do machine learning at scale. This means scaling up your model operations. And it’s what MLOps is all about. But how do you...

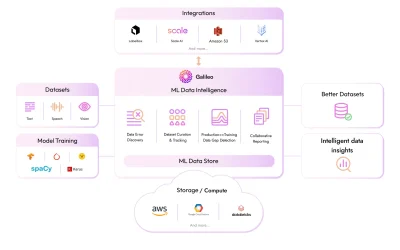

Introducing ML Data Intelligence For Unstructured Data

“The data I work with is always clean, error free, with no hidden biases,” said no one who has ever worked on training and productionizing ML models. Uncovering data errors is messy, ad-hoc and as one of our customers put it, ‘soul-sucking but critical work to build...

The MLOps Stack is Missing A Layer

Deploying ML at scale remains a huge challenge. A key reason: the current technology stack is aimed at streamlining the logistics of ML processes, but misses the importance of model quality. Machine Learning (ML) increasingly drives business-critical applications...

Test your data quality in minutes with PipeRider

tl;dr: If you missed out on PipeRider’s initial release, now is a great time to take it for a spin. Data reliability just got even more reliable with better dbt integration, data assertion recommendations, and reporting enhancements. PipeRider is open-source and...

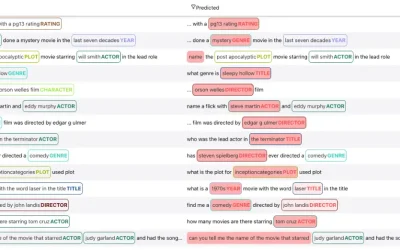

Improving Your ML Datasets, Part 2: NER

In our first post, we dug into 20 Newsgroups, a standard dataset for text classification. We uncovered numerous errors and garbage samples, cleaned about 6.5% of the dataset, and improved the validation F1 score by 7.24 points. In this blog, we look at a new task: Named...
