“The data I work with is always clean, error free, with no hidden biases”

said no one who has ever worked on training and productionizing ML models. Uncovering data errors is messy, ad hoc and, as one of our customers put it, ‘soul-sucking but critical work to build better models’.

We learned this the hard way as we trained and deployed thousands of ML models into production at Google and Uber AI over the past decade.

We realized that the ‘ML data problems’ of constantly ensuring data robustness (fewer errors and biases) and representativeness (relevance to the ever-changing world) were the most time-consuming yet highest-ROI tasks in building highly performant models.

MACHINE LEARNING IS LARGELY A MESSY DATA PROBLEM

It is common knowledge that improving the quality of your data often leads to larger gains in model performance than tuning your model. Data errors can creep into your datasets and cause catastrophic repercussions in many ways:

Sampling Errors

Data curation is not as straightforward as selecting equal amounts of data for each prediction class. Data scientists often consider various factors such as trustworthy data sources, broad feature representation, data cleanliness, data generalizability and more. Such complexities often result in overlooked aspects of the data, invariably causing model blindspots.
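As a rough illustration of how such a blindspot might be surfaced, the sketch below counts samples per slice (here, label and data source) and flags slices that make up less than a chosen share of the dataset. The function name, the `label` and `source` fields, and the 10% threshold are all hypothetical; this is a minimal sketch, not a description of how Galileo works.

```python
from collections import Counter

def underrepresented_slices(samples, keys, min_share=0.1):
    """Return {slice: share} for slices below min_share of the dataset.

    `samples` is a list of dicts; `keys` names the fields that define a slice.
    Only slices that appear at least once can be flagged; slices that are
    missing entirely need a separate check against an expected list.
    """
    if not samples:
        raise ValueError("samples must not be empty")
    counts = Counter(tuple(s[k] for k in keys) for s in samples)
    total = len(samples)
    return {sl: n / total for sl, n in counts.items() if n / total < min_share}

# Toy dataset (made up for illustration): SMS spam is barely represented.
samples = (
    [{"label": "spam", "source": "email"}] * 12
    + [{"label": "ham", "source": "email"}] * 7
    + [{"label": "spam", "source": "sms"}] * 1
)
flagged = underrepresented_slices(samples, keys=("label", "source"))
print(flagged)
```

A check like this only covers the slices you think to define, which is part of why sampling errors are so easy to overlook.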

Labeling Errors

Labels are often human-curated or synthetically assigned to every data sample for supervised or semi-supervised training. In either case there is always a margin for error, whether it comes from the negligence of the human-in-the-loop or from missed edge cases in labeling algorithms. Such errors, even in small numbers, can silently cause mispredictions on a larger-than-expected slice of production traffic.
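One common heuristic for surfacing likely label errors is to look for samples where a trained model confidently predicts a class other than the assigned label. The sketch below assumes you already have per-class predicted probabilities for each sample; the function name and the 0.9 threshold are hypothetical choices for illustration, not Galileo's method.

```python
def flag_suspect_labels(labels, probs, threshold=0.9):
    """Return indices where the model confidently disagrees with the given label.

    `labels` holds integer class ids; `probs[i]` holds the model's predicted
    probability for each class on sample i.
    """
    suspects = []
    for i, (label, p) in enumerate(zip(labels, probs)):
        predicted = max(range(len(p)), key=p.__getitem__)  # argmax over classes
        if predicted != label and p[predicted] >= threshold:
            suspects.append(i)
    return suspects

# Toy predictions (made up for illustration).
labels = [0, 1, 1, 0]
probs = [
    [0.95, 0.05],  # agrees with label
    [0.97, 0.03],  # labeled 1, but model is confident in class 0
    [0.40, 0.60],  # agrees with label
    [0.45, 0.55],  # disagrees, but not confidently
]
suspects = flag_suspect_labels(labels, probs)
print(suspects)
```

Flagged samples are candidates for human review rather than confirmed errors, since the model itself may be the one that is wrong.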

Data Freshness

Labeled datasets are often reused over long periods of time due to the high cost of labeling. Moreover, deployed models are seldom retrained regularly on the right fresh data. This leads to data staleness, ML models serving new and unseen traffic in production, mispredictions, and ML teams reacting to customer complaints instead of getting ahead of them.
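A simple way to make staleness visible is to compare the distribution of a categorical feature in the training set against recent production traffic. The sketch below uses total variation distance (0 means identical distributions, 1 means no overlap); the function name and the toy intent categories are hypothetical, and in practice the alerting threshold would need tuning per feature.

```python
from collections import Counter

def total_variation_distance(train_values, prod_values):
    """Total variation distance between two categorical samples, in [0, 1]."""
    if not train_values or not prod_values:
        raise ValueError("both samples must be non-empty")
    train_counts = Counter(train_values)
    prod_counts = Counter(prod_values)
    categories = set(train_counts) | set(prod_counts)
    n_train, n_prod = len(train_values), len(prod_values)
    return 0.5 * sum(
        abs(train_counts[c] / n_train - prod_counts[c] / n_prod)
        for c in categories
    )

# Toy data (made up for illustration): "refund" never appeared in training.
train = ["billing"] * 6 + ["login"] * 4
prod = ["billing"] * 3 + ["login"] * 3 + ["refund"] * 4
drift = total_variation_distance(train, prod)
print(round(drift, 3))
```

A rising distance over time suggests the model is increasingly serving traffic it was never trained on.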

THE ML DATA PROBLEM LACKS PURPOSE-BUILT TOOLING

If you ever look at an ML developer’s monitor, you likely would not see a screen filled with model monitoring dashboards or implementations of model algorithms, but rather Python scripts and Excel sheets. Why? They are constantly digging into the data that powers their models to answer questions and fix errors, all in service of improving the quality of their model’s predictions.

There is a large gap in ML tooling when it comes to giving ML developers the superpowers they need to find, fix, track, and share ML data errors quickly. As unstructured data in the enterprise continues to grow exponentially, the ML data problem is the largest impediment to meeting the demand for rapid enterprise ML. Python scripts and Excel sheets will not cut it.

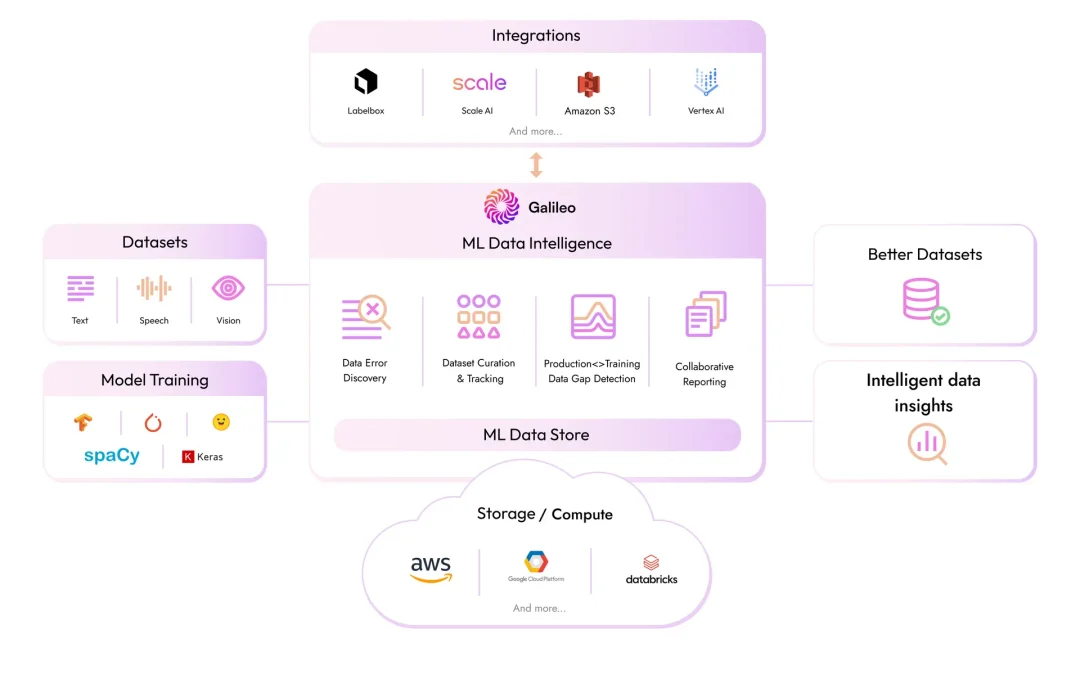

GALILEO: ML DATA INTELLIGENCE TO SOLVE THE ML DATA PROBLEM

One year ago, we set out to build Galileo to provide the insights and answers necessary to rapidly identify and fix crucial data blindspots and improve model predictions in production: a data scientist’s assistant as they go through the constant iterative process of improving their model’s performance.

We call this ML Data Intelligence. Doing ML effectively takes a village, and we built Galileo to impact everyone in the ML workflow –

- from the BD/Sales engineer who got a crappy data dump from a customer and is now manually finding data errors before sending it to downstream teams of analysts and data scientists

- to the data scientist who is both training models and fixing data between iterations while simultaneously attempting active learning on models in production

- to the Subject Matter Experts (SMEs) who want to quickly surface data errors and ambiguity to provide expert opinions and best next steps for data scientists: Does the data abide by certain rules or industry protocols? Do we need more data of a certain type? Do we need to change the labels we are working with? Should we remove a certain type of data since it seems to be biasing the model?

- to the PM and engineering leaders who must track ROI on their data procurement, annotation costs, and product KPIs

Galileo provides ML teams with an intelligent ML data bench to collaboratively improve data quality across their model workflows, from pre-training to post-production.

At Galileo, we are only a year into addressing this massive problem, and have been excited to be working with a host of ML teams across F500 enterprises and high-growth startups, helping them become better, faster, and more cost-effective with their ML data.

If you are interested in using Galileo, please reach out. We would love to hear from you.

Join our Slack Channel to follow our product updates and be a part of the ML Data Intelligence community!

Thanks for reading,

This blog has been republished by AIIA. To view the original article, please click HERE.
