ARTICLES

Enabling AI Regulation Compliance for Enterprises

Learn about new government regulations for safer, more trustworthy AI products – and how we're addressing them. As generative AI continues to make headlines, international efforts are underway to both harness the promise and address the risks of artificial...

Saving and Restoring Machine Learning Models with W&B

Introduction: In this notebook, we'll look at how to save and restore your machine learning models with Weights & Biases. W&B lets you save everything you need to reproduce your models - weights, architecture, predictions, and code to a safe place. This is...

Olivio Sarikas – How AI Will Redefine Culture and Entertainment

Podcaster and vlogger Olivio Sarikas delivers a terrific talk on how AI will transform culture and entertainment. A dash of art history, a touch of diffusion models, and a bit of philosophy make for one of the more unique and intriguing talks at our LLMs and the...

Lilly Chen – Contenda – LLM Valley: A Video Game-Themed Talk Through AI Product Markets and Venture Capital

Lilly Chen of Contenda delivers a fun and funny talk on AI product-market fit and venture capital through the lens of an old-school video adventure game.

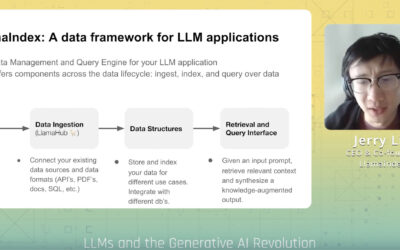

LlamaIndex – Practical Data Considerations for Building Production Ready LLM Apps

LlamaIndex CEO Jerry Liu walks us through the various ways to build production-ready LLM apps. He covers Retrieval Augmented Generation and many of the challenges of getting it right in the real world.

AI Quality Management: Key Processes and Tools, Part 2

Achieving high AI Quality requires the right combination of people, processes, and tools. In the last blog post, we introduced the processes and tools for driving AI Quality in the early stages of model development – data quality assessment, feature development, and...

On AI Ethics: Wendy Foster, Director of Engineering and Data Science at Shopify

Shopify, a leading provider of essential internet infrastructure for commerce, is relied on by millions of merchants worldwide to market and grow their retail businesses. This past Black Friday and Cyber Monday weekend alone, Shopify merchants achieved a...

Choosing the Right Infrastructure for Production ML

Choosing the right infrastructure for your production ML can impact the performance, scalability, cost, and security of models. When it comes to deploying machine learning models in production, choosing the right infrastructure is crucial for ensuring the success of...

7 Recommendations for a Safe Integration & Adoption of Generative AI and LLMs in the Enterprise

Executive Summary. Generative AI and LLMs: Unlocking new opportunities for innovation, efficiency, and productivity, while posing potential risks including confidentiality breaches, IP infringements, and data privacy violations. Seven Recommendations: Safely integrate...

Feature Engineering for Recommendation Systems – Part 1

The way ML features are typically written for NLP, vision, and some other domains is very different from the way they are written for recommendation systems. In this series, we’ll examine the most common ways of engineering features for recommendation systems. In the...
