by Fennel | Jul 25, 2023 | Uncategorized

The way ML features are typically written for NLP, vision, and some other domains is very different from the way they are written for recommendation systems. In this series, we’ll examine the most common ways of engineering features for recommendation systems. In the...

by Superb AI | Jul 18, 2023 | Uncategorized

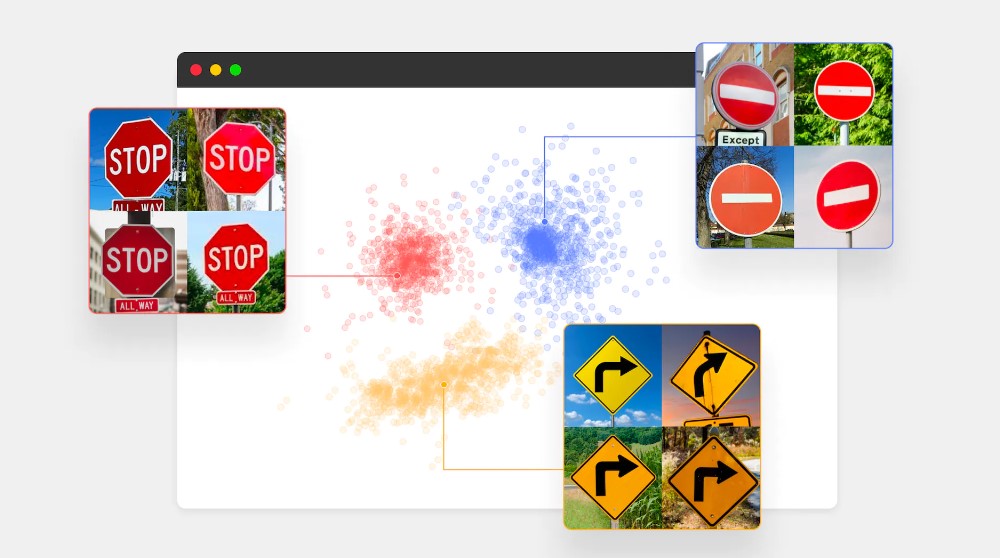

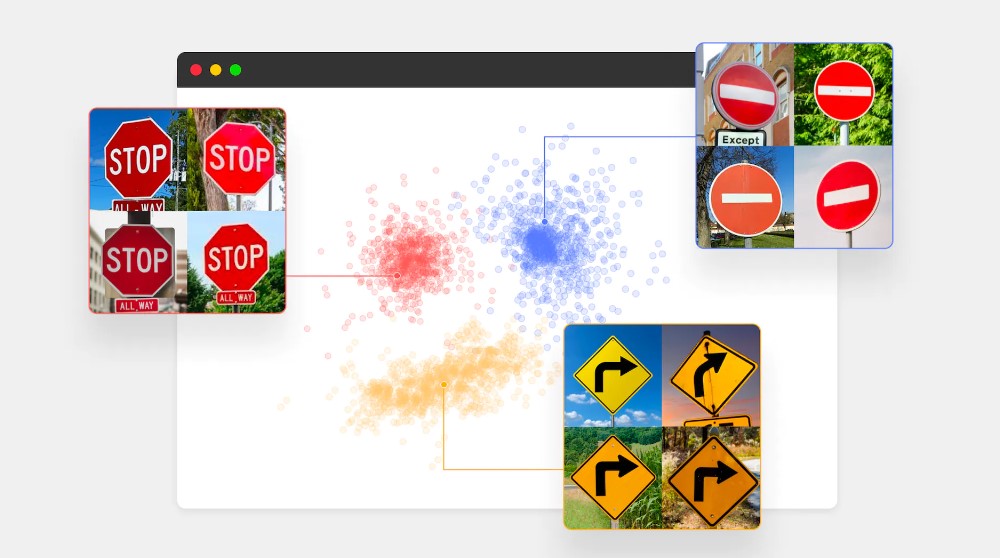

The concept of embedding in machine learning can be traced back to the early 2000s, with the development of techniques such as Principal Component Analysis (PCA) and Multidimensional Scaling (MDS). These methods focused on finding low-dimensional representations of...

by Modzy | Jul 18, 2023 | Uncategorized

Presented by the tinyML Foundation: Running and Managing Fleets of Single Board Computers at Scale. The increase in compute power available on single board computers (SBCs) has opened the door to a whole new class of ML-powered applications that can run on the likes...

by Weights and Biases | Jul 13, 2023 | Uncategorized

In this article, we will take a look at some of the Hugging Face Transformers library features, in order to fine-tune our model on a custom dataset. The Hugging Face library provides easy-to-use APIs to download, train, and infer state-of-the-art pre-trained models...

by TruEra | Jul 11, 2023 | Uncategorized

Achieving high AI Quality requires the right combination of people, processes, and tools. In the post, “What is AI Quality?” we defined what AI Quality is and how it is key to solving critical challenges facing AI today. That is, AI Quality is the set...

by Arize AI | Jul 6, 2023 | Uncategorized

Stefan Kalb is on a mission to eliminate food waste and revolutionize the grocery business. Shelf Engine – the company he co-founded and leads as CEO – has notched an impressive track record since its founding in 2016, diverting over 4.5 million pounds of food waste...
