tl;dr ArtiVC is lightning fast.

We recently released ArtiVC, our centralized file versioning solution specifically designed for large files. After sharing the release on Reddit and other places, one question that kept coming up was “how does ArtiVC perform in benchmarks compared to other solutions?”.

To answer this question, we compared the speed of ArtiVC with three other popular tools for data transfer and storage: AWS CLI, Rclone, and DVC. The full benchmark report can be found on the new ArtiVC website, but here we’ve picked out some of the more interesting results and explain why ArtiVC performs so well.

Query, query, quite contrary

To understand the speed of ArtiVC, you also need to understand how ArtiVC works under the hood. But first, let’s look at how DVC, another popular versioning tool, works.

In its comparison with Rclone, the DVC team described a “less obvious performance issue”: how to determine which files need to be transferred during an upload or download operation.

DVC listed two methods for making this determination:

- Querying for each individual file

- Querying the full remote listing

Which of these methods yields the best performance depends on the number of files present in local or remote. This is because every query to remote storage carries the overhead of an API call, which takes time. Knowing this, it’s possible to find the threshold beyond which it is optimal to retrieve a full remote listing.
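To make the threshold idea concrete, here is a rough sketch that simply counts API calls for each method. It is a simplified cost model, not DVC's actual logic: the function names are made up for illustration, and the page size of 1,000 entries per listing call is an assumption (it matches the usual limit on S3-style listing APIs, but your remote may differ).

```python
import math


def per_file_calls(local_count: int) -> int:
    # Hypothetical model: one API call per local file to check whether it exists in remote.
    return local_count


def full_listing_calls(remote_count: int, page_size: int = 1000) -> int:
    # Hypothetical model: the remote listing is paginated, so it costs one call per page.
    # Even an empty remote needs one call to find out that it is empty.
    return max(1, math.ceil(remote_count / page_size))


def cheaper_method(local_count: int, remote_count: int, page_size: int = 1000) -> str:
    # Pick whichever method needs fewer API calls under this simplified model.
    per_file = per_file_calls(local_count)
    listing = full_listing_calls(remote_count, page_size)
    return "per-file" if per_file < listing else "full-listing"
```

Under these assumptions, 500 local files against 50000 remote files favors the full listing (50 calls versus 500), while a single local file against the same remote favors a per-file query (1 call versus 50). Real tools also have to account for latency, parallelism, and hashing time, which this sketch ignores.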

The Third Method

What’s different in ArtiVC?

The centralized nature of ArtiVC means that there is a single source of truth for knowing which files exist in remote, and that is the commit object. The commit object is only uploaded after all of the files have been pushed to remote, so it’s guaranteed to be an accurate list of files that have been added to the repository.

The benefit of this is that only one API request is required to query remote for the commit object. Local files can then be compared against it and pushed to remote. There’s no need to query each file, or even to query a full remote listing, which may be split over multiple API calls.
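The idea can be sketched in a few lines. This is an illustration only, not ArtiVC's actual code or on-disk format: the commit object is modeled as a plain mapping of path to content hash, and `FakeRemote`, `fetch_commit`, and `files_to_push` are hypothetical names invented for this example.

```python
import hashlib
from typing import Dict, List


def content_hash(data: bytes) -> str:
    # Assumption: files are identified by a hash of their content.
    return hashlib.sha1(data).hexdigest()


class FakeRemote:
    """Stand-in for remote storage that counts how many API calls are made."""

    def __init__(self, commit_entries: Dict[str, str]) -> None:
        self._commit_entries = commit_entries
        self.api_calls = 0

    def fetch_commit(self) -> Dict[str, str]:
        # The single remote request: download the commit object.
        self.api_calls += 1
        return dict(self._commit_entries)


def files_to_push(local_files: Dict[str, bytes], remote: FakeRemote) -> List[str]:
    # One API call, then every comparison happens locally.
    commit_entries = remote.fetch_commit()
    return [
        path
        for path, data in sorted(local_files.items())
        if commit_entries.get(path) != content_hash(data)
    ]
```

With a commit that already lists `a.txt`, pushing a local folder containing an unchanged `a.txt` and a new `b.txt` would select only `b.txt`, and the remote would be queried exactly once no matter how many files are involved.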

Show me the numbers

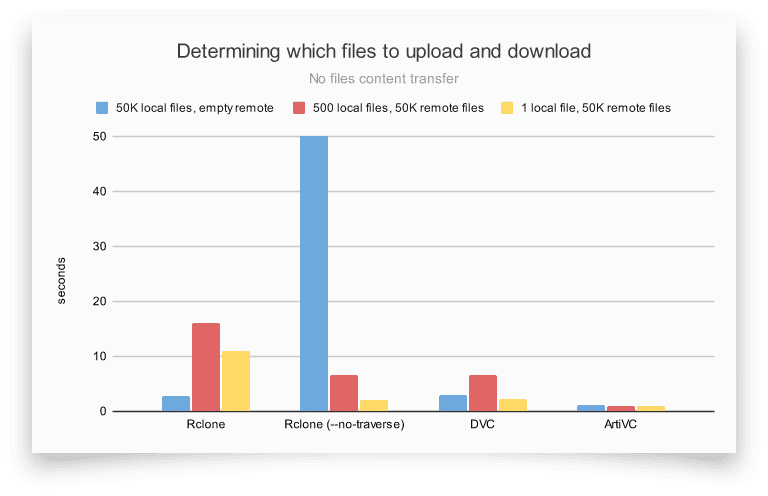

ArtiVC’s method has a huge effect on real-world performance. The benchmark chart shows the time it takes to determine which files need to be transferred, using the dry-run feature of each tool, under three scenarios.

- 50000 local files and no remote files

- 500 local files and 50000 remote files

- 1 local file and 50000 remote files

For each scenario, ArtiVC is lightning fast at around 1 second.

Check out the ArtiVC benchmark report for the full results, including benchmarks for transferring large files and large numbers of files (spoiler: it’s also very fast in each of those scenarios). For an overview of the repository structure, see the How it Works page.

Try for yourself

ArtiVC is a standalone CLI tool; no server or additional tools are required to use it.

Install it with Homebrew, or download the latest binary from GitHub.

brew tap infuseai/artivc

brew install artivc

Then head over to the getting started page to get set up. If you like what you see then please consider giving us a star on GitHub.

InfuseAI ❤ Open Source

Open source is coursing through our veins. Check out our other projects:

- PrimeHub: The full MLOps lifecycle in one platform

- PipeRider: Data observability for all your pipelines

- ArtiVC: A modern take on version control for large files

- colab-xterm: How about a full TTY terminal in Google Colab’s free tier? Yes, please.

Don’t forget to join our machine learning community and follow us online:

- Join the InfuseAI Discord to chat about data science and MLOps topics

- Hit us up on Twitter

- View all our projects on GitHub

This blog has been republished by AIIA. To view the original article, please click HERE.
