Why feature engineering and feature management is as important to your ML project as the algorithm you choose

There are endless articles and tutorials on topics in Data Science and ML, from Scikit-learn to Keras to PyTorch. In just a few hours, you can create a GAN to generate images of what you’d look like as a cartoon character, or build an LSTM to create text messages as if they came from you. These are powerful technologies with amazing use cases. But there’s a key point that so many of these tutorials miss: the power of ML doesn’t come from the models, it comes from the data, and more specifically, the features.

“Coming up with features is difficult, time-consuming, requires expert knowledge. ‘Applied machine learning’ is basically feature engineering”. ~ Andrew Ng

Why Aren’t Features More…Featured?

You’ll find the same oversight in resources available across the ML space. For example, Coursera’s Introduction to Machine Learning Course has ten sections, but only two of them are about data engineering, and the topics covered are mathematical concepts around data structuring (outlier removal and feature scaling), not feature creation. MIT’s incredibly popular 6.S191 course (which I personally recommend) is a great in-depth look at the Deep Learning space, but features exactly zero lessons on data management or engineering in its 10 courses. Andrew Ng’s famous Stanford Machine Learning course, which is one of the best ML courses available, dedicates just a handful of its 112 available lectures to the importance of data. In one such video regarding anomaly detection, Andrew states, “It turns out […] that one of the things that has a huge effect on how well [a model] does is what features you use.”

As an ML community, we have focused our technological efforts similarly, building incredible tools for modeling while neglecting the data that feeds them. We’ve nearly automated the process of building classical ML models: iterating through thousands of Sklearn algorithms takes just a few lines of code, and tracking that information in a clean way requires just a few more. Keras has massively simplified and democratized the deep learning framework, enabling even beginners to rapidly progress. But all of these models, frameworks, and demos assume a crucial piece of information: your data is already preprocessed and ready to go in a CSV file.

No Data Science Without Data

For learning, this can be a great asset, and removes much of the friction to getting started. But in practice, this is an assumption that is nearly never true. I often work with new college grads on real-world data science projects, and the most common feedback I hear is, “I cannot believe how much of our time was spent on preprocessing the data. I never learned that in school.” This leads me to two points that universities and online courses need to consider in forthcoming curriculums:

- Data management is the majority of the work of data science.

- Data Scientists need to learn how to manage data and build valuable features for their models.

Nirek Sharma, a leading Data Scientist at Splice Machine, told me, “Data management is critically important and often under-appreciated. Without close attention to detail during the engineering and pre-processing stages, the modeling stages will be handicapped.”

When asked about his personal experience, Nirek said that he spends about 70% of his time on data engineering, and 30% on modeling for projects with clients. Even when building demo models on “perfect” data, he spends as much as 60% of his time feature engineering.

Feature engineering is not simply a mundane task that needs to be done. It has an outsized impact on the quality of your model. Time and time again I’ve seen projects featuring a linear regression on meticulously engineered features perform better than throwing a Keras model at the problem with simple preprocessing.

I’d like to be clear: the advancements made in Machine Learning, particularly in deep learning, are fantastic and have incredible use cases for good. It is simply not the entire solution to putting these advances into production.

It’s Time to Reengineer Feature Engineering

So features are important. Can’t we automate that too? There are some awesome open source automated feature engineering projects, even some that work with big data frameworks like Spark and Dask. Well, maybe. There’s quite a bit of debate whether you can fully automate feature engineering. Domain expertise is real and difficult to replicate, but beyond that, targeted time-based features can add a lot of signal to your models and can be tough to find in a sea of raw data.

For the purposes of this article, though, let’s say that yes, you can fully automate the process of feature extraction and engineering. That still isn’t the whole story, because now you have a static set of features based on the data right now. Data doesn’t stay the same, and you can’t assume that it does when building models. If you build a model with a specific set of hyperparameters on a set of data, and then rebuild that model with identical hyperparameters on a slightly different set of data the next day, you now have a different model.

What happens when you get more data? What happens when data changes? What about when you find another dataset that you can join with the current one to drive even more signal?

How do you maintain lineage, governance, and reproducibility across your models, as it relates to the data?

What is needed here is a set of DevOps-like tools to manage and automate the tracking of data for ML models the same way software engineers use GitHub to automate the tracking of code for applications. Without that, you’ve built a one-time analysis, not a production machine learning model.

Enter the Feature Store

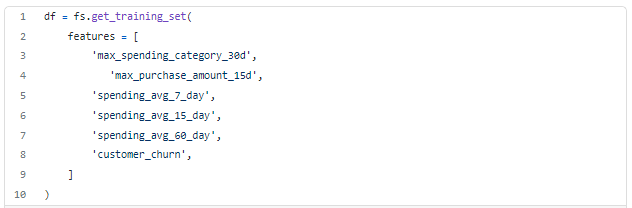

It would be irresponsible for me to leave this article without a proposed solution. The Feature Store is a mechanism that adds governance, lineage, and structure to your data engineering workflow. It gives Data Engineers a clear (and ideally flexible) workflow for creating and documenting features, and enables data scientists to reuse those features to create training datasets that are kept up to date along with pipelines to modify new data in the system. The Feature Store adds an API layer around the data (potentially through a DataFrame or SQL construct) that makes getting a training dataset for a model as simple as…

There are a number of benefits to the Feature Store, like feature sharing, access control, real-time feature serving, and many others, some of which I cover in my article here. Another great resource in the Feature Store space is featurestore.org, which shows currently available Feature Stores and some important differences between them.

At the end of the day, the power of any given machine learning model depends on the strength of the model and the quality of the data used to build it. As a field, we need to spend more time emphasizing the importance of data and the features that inform ML models in the first place.

This blog has been republished by AIIA. To view the original article, please click HERE.

Recent Comments