A combination of “deep learning” and “fake media,” a deepfake is manipulated video content that is nearly indistinguishable from authentic video. Deepfake creators often target celebrities, world leaders, and politicians, manipulating the video to portray the subject saying something they did not.

You may have seen one of the more famous deepfakes, like Jordan Peele’s version of Barack Obama, Britain’s Channel 4 video of the Queen of England’s holiday speech, or perhaps even Salvador Dalí introducing his art and taking selfies with visitors.

As Dalí proves, deepfakes can be used for good. They have brought history to life, restored the voices of ALS patients, and aided in crime scene forensics. However, nefarious actors are capitalizing on the dark side of deepfakes, using the same techniques to disseminate pornography, conduct misinformation campaigns, commit securities fraud, and obstruct justice.

The big-picture problem with the proliferation of rogue deepfakes is that they propel us toward a “zero-trust society” in which it’s increasingly difficult to distinguish reality from fabrication. The mere existence of deepfakes makes room for tremendous doubt about the authenticity of legitimate video evidence. As Professor Lilian Edwards of Newcastle University points out, “The problem may not be so much the faked reality as the fact that real reality becomes plausibly deniable.”

Mitigating deepfakes

As our newsfeeds are flooded with high-quality deepfakes, it is unrealistic to place the burden of identification and mitigation on humans alone. In 2019, Microsoft, Facebook, and Amazon launched the Deepfake Detection Challenge to motivate development of automated tools for identifying counterfeit content. Academic research has accelerated, with a focus on detecting forgeries, and the Defense Advanced Research Projects Agency (DARPA) funded a media forensics project to address the issue.

Fear of interference in the recent US election also inspired a flurry of US regulatory activity. In December 2020, the IOGAN Act directed the National Science Foundation (NSF) and the National Institute of Standards and Technology (NIST) to research deepfakes. The National Defense Authorization Act (NDAA) kicked off 2021 with the creation of the Deepfake Working Group, tasked with reporting on the “intelligence, defense, and military implications of deepfake videos and related technologies.”

“The government isn’t ready. We don’t have the technologies yet to be able to detect more sophisticated fakes.” House Intelligence Committee chair Adam Schiff, July 2020 interview on Recode Decode.

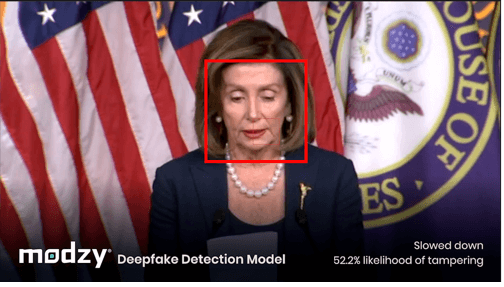

Modzy’s Deepfake Detection Model

Modzy® recently added a deepfake detection model to our marketplace. The algorithm is a combination of models applied in series. Overall, the model attained a precision of 0.777, a recall of 0.833, and an F1 score of 0.804 when run against the Deepfake Detection Challenge dataset. We ran the model on a half dozen of the most controversial recent videos, with impressive results.
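For readers who want to see how the three reported metrics relate, here is a minimal sketch. It assumes the F1 score is the standard harmonic mean of precision and recall; the function and variable names are illustrative only and are not part of the Modzy model or its API.

```python
def f1_score(precision: float, recall: float) -> float:
    """Return the harmonic mean of precision and recall (standard F1 definition)."""
    if precision + recall == 0:
        # Avoid division by zero when the model gets nothing right.
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Figures quoted in this post; the variable names are hypothetical.
reported_precision = 0.777
reported_recall = 0.833

f1 = f1_score(reported_precision, reported_recall)
print(f"F1 = {f1:.3f}")  # prints "F1 = 0.804"
```

In plain terms, a precision of 0.777 means that roughly 78% of the videos the model flags are in fact fake, while a recall of 0.833 means it catches roughly 83% of the fakes it is shown. The F1 score combines the two into a single number.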

(Original Source: https://www.youtube.com/watch?v=cQ54GDm1eL0)

(Original Source: Pelosi: GAO confirms White House ‘broke the law’)

(Original Source: https://www.youtube.com/watch?v=IvY-Abd2FfM)

(Original Source: https://www.kaggle.com/c/16880/datadownload/dfdc_train_part_49.zip)

As evidenced above, the model performed very well, distinguishing authentic video from generated or altered video in every case. Unfortunately, deepfake detection is a cat-and-mouse game: for every new automatic detection model, there is a simultaneous effort to develop deepfake technologies that can elude detection tools. Deepfake tools are also increasingly accessible. Novices can now generate convincing video with only a few hours of compute time, and this ease of access has resulted in a deluge of malicious deepfakes that inflict emotional, political, and reputational damage.

The answer to this challenge is improving AI-powered deepfake detection capabilities. Over time, we’ll likely rely on more sophisticated AI-powered detection systems to automatically detect and flag altered video and prevent the spread of misinformation. As these algorithms and approaches improve, our data science team will continue to develop more robust models to meet the need for trustworthy detection solutions.

This blog has been republished by AIIA.
