If you’re using machine learning to scale your business, do you also have a plan for Model Governance to protect against ethical, legal, and regulatory risks? When not addressed, these issues can lead to financial loss, lack of trust, negative publicity, and regulatory action.

In recent years, it’s become easier to deploy AI systems to production. Emerging solutions in the ModelOps space, similar to DevOps, can help with model development, versioning, and CI/CD integration. But a canonical approach to risk management for AI models, also called Model Governance, has yet to emerge and become standard across industries.

With an increasing number of regulations on the horizon in 2022, many companies are looking for a Model Governance process that works for their organization. In this article, we first discuss the origins of Model Governance in the financial industry and what we can learn from the difficulties banks have encountered with their processes. Then, we present a new 5-step strategy to get ahead of the risks and put your organization in a position to benefit from AI for years to come.

Why do we need Model Governance?

It’s natural to wonder why we need a new form of governance for models. After all, models are a type of software, and the tech industry already has standard processes around managing risk for the software that powers our lives every day.

However, models are different from conventional software in two important ways.

1) Data drift. Models are built on data that changes over time, so their quality can decay in silent, unexpected ways. Data scientists use the term data drift to describe how a process or behavior can change, or drift, as time passes. There are three kinds of data drift to be aware of: concept drift, label drift, and feature drift.

2) Black box behavior. Unlike conventional code, where inputs can be followed logically to their outputs, models are a black box. Even an expert data scientist will find it difficult to understand how and why a modern ML model arrives at a particular prediction.

What we can learn from Model Governance in the finance industry

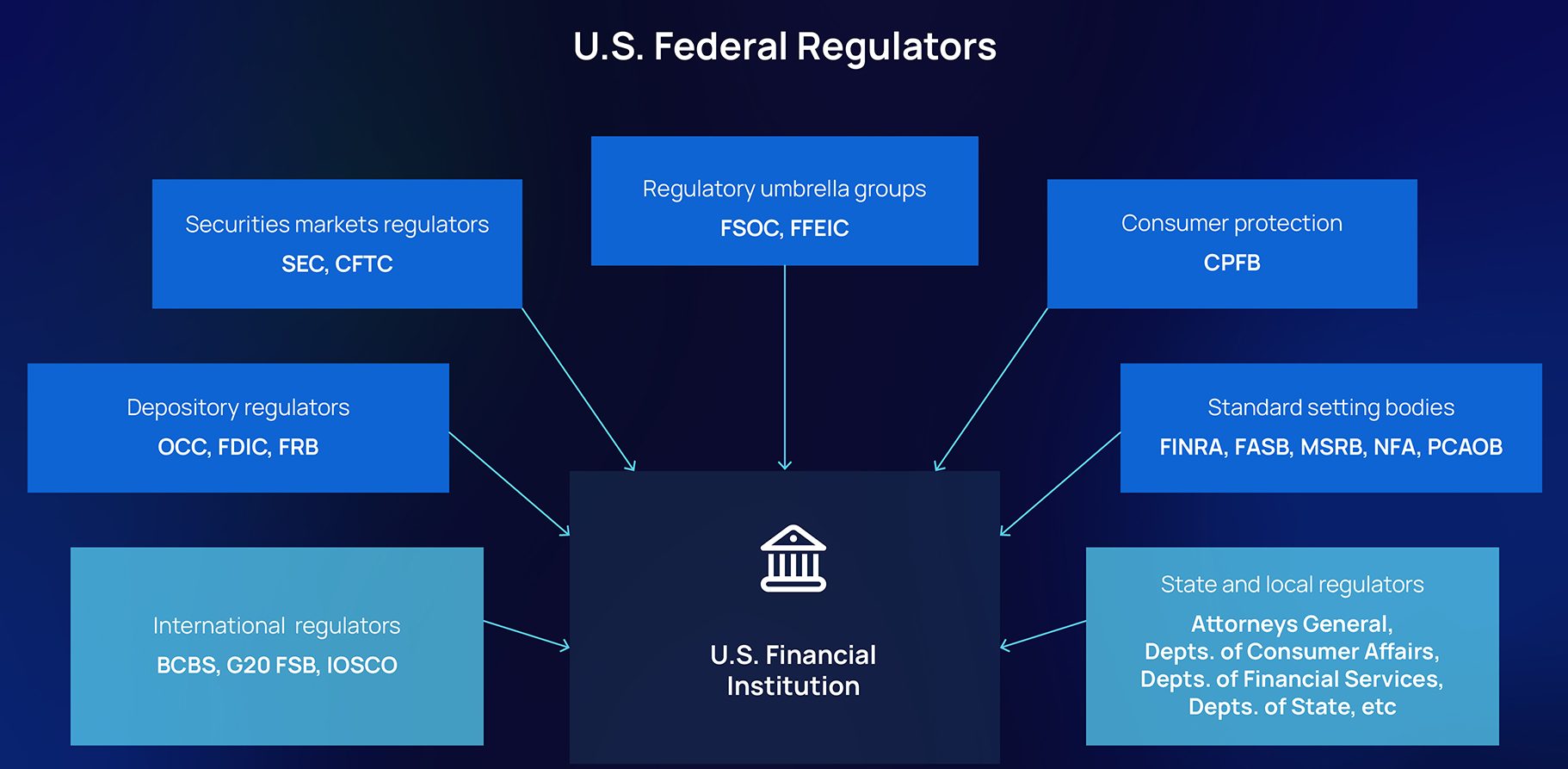

The origins of Model Governance can be traced to the banking industry and the 2008 financial crisis. As a result of that crisis, US banks were required to comply with the SR 11-7 regulation and its OCC attachment for model risk management (MRM). These regulations aim to ensure banking organizations are aware of the adverse consequences (including financial loss) of decisions based on models, and have an active plan to manage these risks. A typical bank may be running hundreds or thousands of models, and a single model failure can cause a loss of billions of dollars.

Several decades ago, the vast majority of those models were quantitative or statistical. But today, AI models are essential to banking operations. As one example, banks are expected to rely on models — not just their executives’ gut instinct and experience — when making decisions about deploying capital in support of lending and customer management strategies. Stakeholders, including shareholders, board members, and regulators, want to know how the models are making business decisions, how robust they are, the degree to which the business understands these models, and how risks are being managed.

Struggling to meet the requirements of AI

The traditional Model Governance processes designed for statistical models at banks consisted of a series of human reviews across the development, implementation, and deployment stages.

However, this process has struggled to scale and evolve to meet the challenges of using AI models. Here are some of the difficulties described in a recent research paper on Model Governance at financial institutions:

- An increasing number of models under management puts pressure on ML teams. Many teams still use basic spreadsheets to track model inventory.

- As the complexity of models increases, more time is needed for validation. It can take as much as 6–12 months to validate a sufficiently complex AI model.

- A lack of explainability tools means modeling teams can’t make use of advanced techniques like deep neural networks, even when those techniques show promising ROI, because they won’t pass muster with the Model Governance team.

- OCC audits are uncovering models that should not be running in production. Current governance practices translate to high compliance costs: in a given year, the US financial industry spends about $80 billion on model compliance. And between 2008 and 2016, US financial institutions paid close to $320 billion in regulatory fines.

- Most teams do intermittent monitoring of their models in an ad-hoc manner. Intermittent monitoring fails to identify critical changes in the environment, data drift, or data quality issues.

- Issues don’t get detected or rectified promptly due to a lack of run-time monitoring and mitigation. They only get caught during model retraining or regulatory inquiries, by which time the institution is already at risk of business loss, reputational damage, and regulatory fines.

- Regulatory complexity and uncertainty make governance increasingly difficult. For US credit models alone, one has to make sure models adhere to regulations like the Fair Housing Act, Consumer Credit Protection Act, Fair Credit Reporting Act, Equal Credit Opportunity Act, and Fair and Accurate Credit Transactions Act. It’s also possible for an AI model to be deployed in multiple territories where one jurisdiction has more conservative guidelines than another.

- A growing number of metrics are being proposed to quantify model bias. Adding more metrics to an already non-scalable, manual model monitoring process means more overhead.

The new 5-step approach to Model Governance

These risks and the variety of AI applications and development processes call for a new Model Governance framework that is simple, flexible, and actionable. A streamlined Model Governance solution is a 5-step workflow.

1. Map — Record all AI models & training data to map and manage the model inventory in one place.

In addition to setting up an inventory, you should be able to create configurable risk policies and regulatory guidelines. Doing this at the level of the model type will help you track models through their lifecycle and set up flexible approval criteria, so Governance teams can ensure regulatory oversight of all the models getting deployed and maintained.
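As a rough illustration of this step, the sketch below keeps an in-memory inventory in which risk policies are attached to a model type rather than to individual models. All class names, field names, and sample values (`RiskPolicy`, `ModelRecord`, `ModelInventory`, `loan_default`, `loans_2021_q4`) are hypothetical and chosen only for this example; a real inventory would persist its records and track lifecycle transitions as well.

```python
from dataclasses import dataclass, field


@dataclass
class RiskPolicy:
    """Approval criteria shared by every model of one type (hypothetical schema)."""
    model_type: str
    required_checks: tuple
    regulations: tuple = ()


@dataclass
class ModelRecord:
    """One inventory entry: a model version plus the data it was trained on."""
    name: str
    version: str
    model_type: str
    training_data: str
    owner: str
    stage: str = "development"
    passed_checks: set = field(default_factory=set)


class ModelInventory:
    """In-memory registry; a real system would persist this."""

    def __init__(self):
        self._models = {}
        self._policies = {}

    def add_policy(self, policy):
        self._policies[policy.model_type] = policy

    def register(self, record):
        self._models[(record.name, record.version)] = record

    def missing_checks(self, name, version):
        """Checks the policy requires that this model version has not passed yet."""
        record = self._models[(name, version)]
        policy = self._policies[record.model_type]
        return sorted(set(policy.required_checks) - record.passed_checks)


inventory = ModelInventory()
inventory.add_policy(
    RiskPolicy(
        model_type="credit_scoring",
        required_checks=("bias", "explainability", "feature_quality"),
        regulations=("Equal Credit Opportunity Act",),
    )
)
inventory.register(
    ModelRecord(
        name="loan_default",
        version="1.2.0",
        model_type="credit_scoring",
        training_data="loans_2021_q4",
        owner="risk-ml-team",
        passed_checks={"feature_quality"},
    )
)
print(inventory.missing_checks("loan_default", "1.2.0"))
```

Because the policy is keyed by model type, adding a new required check for all credit models is a single change, and every registered model of that type immediately shows it as outstanding.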

2. Validate — Conduct an automated assessment of feature quality, bias, and fairness checks to ensure compliance.

You should be able to perform explainability analysis for troubleshooting models, as well as to answer regulatory and customer inquiries. You should also be able to perform fairness analysis, which can help you look at intersections of protected classes across metrics like disparate impact or demographic parity.
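To make the fairness metrics concrete, here is a minimal sketch that computes selection rates for each intersection of two protected attributes, then derives a disparate impact ratio and a demographic parity gap from them. The function names, the `approved` outcome field, and the toy data are assumptions made for illustration. The metric definitions used here (ratio of favorable-outcome rates, and largest difference in those rates) are one common formulation among several.

```python
from collections import defaultdict


def selection_rates(records, group_keys, outcome_key="approved"):
    """Favorable-outcome rate for each intersection of the given attributes."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for row in records:
        group = tuple(row[k] for k in group_keys)
        totals[group] += 1
        favorable[group] += 1 if row[outcome_key] else 0
    return {g: favorable[g] / totals[g] for g in totals}


def disparate_impact(rates, group, reference):
    """Ratio of a group's selection rate to the reference group's rate."""
    return rates[group] / rates[reference]


def demographic_parity_gap(rates):
    """Largest difference in selection rate between any two groups."""
    return max(rates.values()) - min(rates.values())


def make_rows(gender, age_band, n_total, n_approved):
    """Toy data: the first n_approved rows of each group are approved."""
    return [
        {"gender": gender, "age_band": age_band, "approved": i < n_approved}
        for i in range(n_total)
    ]


records = (
    make_rows("F", "under_40", 10, 4)
    + make_rows("M", "under_40", 10, 8)
    + make_rows("F", "40_plus", 10, 6)
    + make_rows("M", "40_plus", 10, 8)
)

rates = selection_rates(records, ("gender", "age_band"))
di = disparate_impact(rates, ("F", "under_40"), ("M", "under_40"))
gap = demographic_parity_gap(rates)
print(f"disparate impact: {di:.2f}, parity gap: {gap:.2f}")
```

A disparate impact ratio below 0.8 is often flagged under the "four-fifths" rule of thumb, though the right threshold depends on the regulation and use case. Grouping by a tuple of attributes is what surfaces intersectional gaps that a single-attribute analysis can hide.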

3. Approve — Ensure human approval of models prior to launch to production and capture the model documentation and reports in one place.

There should be reusable templates to generate automatic reports and documentation. You should have the ability to integrate custom libraries explaining models and/or fairness metrics, and you should be able to customize and configure reports specifying the inputs and outputs that were assessed.
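One lightweight way to picture a reusable report template with a human sign-off is sketched below using Python's standard `string.Template`. The template fields, the `render_report` and `can_deploy` helpers, and the sample values are all hypothetical; they only illustrate the idea of a configurable report that stays pending until a named approver signs it.

```python
from string import Template

# Reusable template; the fields are illustrative, not a required format.
REPORT_TEMPLATE = Template(
    "Model: $name v$version\n"
    "Inputs assessed: $inputs\n"
    "Outputs assessed: $outputs\n"
    "Disparate impact: $disparate_impact\n"
    "Approved by: $approver\n"
)


def render_report(name, version, inputs, outputs, disparate_impact, approver=None):
    """Fill the template; an unsigned report is marked PENDING."""
    return REPORT_TEMPLATE.substitute(
        name=name,
        version=version,
        inputs=", ".join(inputs),
        outputs=", ".join(outputs),
        disparate_impact=f"{disparate_impact:.2f}",
        approver=approver or "PENDING",
    )


def can_deploy(approver):
    """Deployment gate: require a named human approver."""
    return approver is not None


report = render_report(
    name="loan_default",
    version="1.2.0",
    inputs=["income", "debt_ratio"],
    outputs=["default_probability"],
    disparate_impact=0.5,
)
print(report)
print("deployable:", can_deploy(None))
```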

4. Monitor — Continuously stress test and performance test the models and set up alerts upon identifying outliers, data drift, and bias.

You should be able to continuously report on all models and datasets, both pre- and post-deployment. You should have the capability to monitor input streams for data drift, population stability, and feature quality metrics. Data quality monitoring is also essential to capture missing values, range violations, and unexpected inputs.

Continuous model monitoring provides the opportunity to collect vast amounts of runtime behavioral data, which can be used to identify weaknesses, failure patterns, and risky scenarios. These tests can help reassure Governance teams by demonstrating the model’s performance across a wide variety of scenarios.
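The drift and data quality checks described in this step can be sketched in a few lines. The example below computes a population stability index (PSI) for one numeric feature against a baseline sample, and counts missing values and range violations. The function names, bucket edges, and sample data are assumptions for illustration, and the small `eps` floor is just one simple way to avoid taking the logarithm of zero for empty buckets.

```python
import math


def psi(baseline, current, edges, eps=1e-6):
    """Population stability index between two samples of one numeric feature."""
    def fractions(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            # Bucket index = number of (sorted) edges the value has reached.
            idx = sum(v >= e for e in edges)
            counts[idx] += 1
        # Floor each fraction at eps so empty buckets do not break the log.
        return [max(c / len(values), eps) for c in counts]

    expected = fractions(baseline)
    actual = fractions(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def quality_issues(rows, feature, low, high):
    """Count missing values and range violations for one feature."""
    missing = sum(1 for r in rows if r.get(feature) is None)
    out_of_range = sum(
        1 for r in rows
        if r.get(feature) is not None and not (low <= r[feature] <= high)
    )
    return {"missing": missing, "out_of_range": out_of_range}


baseline = list(range(100))            # training-time feature values
shifted = [v + 10 for v in baseline]   # production values that have drifted
edges = [25, 50, 75]                   # bucket boundaries from the baseline

drift_score = psi(baseline, shifted, edges)
print(f"PSI: {drift_score:.4f}")

rows = [{"income": 50_000}, {"income": None}, {"income": -10}, {}]
issues = quality_issues(rows, "income", low=0, high=1_000_000)
print(issues)
```

Rules of thumb vary, but PSI values above roughly 0.1 to 0.25 are often treated as worth investigating. In practice you would run checks like these on a schedule for every monitored feature and wire the results into alerts.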

5. Improve: Derive actionable insights and iterate on your model as your customers, business, and regulations change.

Going hand-in-hand with monitoring, you should be able to quickly act to correct the behavior of production models. This should include setting up scenario-based mitigation, based on pre-deployment testing of the model on historical data, or known situations (like payment activity peaking during holidays). You should also be able to configure system-level remediation through the use of alternative models, such as using shadow models for certain population segments if the primary model shows detectable bias during monitoring.
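As a sketch of what system-level remediation with an alternative model might look like, the example below routes predictions for flagged population segments to a shadow model while everyone else stays on the primary model. The `make_router` helper, the two toy scoring functions, and the `region` segment are hypothetical stand-ins; in practice the set of flagged segments would be updated by your monitoring alerts.

```python
def make_router(primary, shadow, segment_of, flagged_segments):
    """Return a predict function that sends flagged segments to the shadow model."""
    def predict(features):
        use_shadow = segment_of(features) in flagged_segments
        model = shadow if use_shadow else primary
        return model(features)
    return predict


# Toy stand-ins for trained models (hypothetical scoring rules).
def primary_model(features):
    return 1 if features["income"] > 50_000 else 0


def shadow_model(features):
    return 1 if features["debt_ratio"] < 0.4 else 0


flagged = set()  # filled in by monitoring, e.g. when a bias alert fires
predict = make_router(primary_model, shadow_model, lambda f: f["region"], flagged)

applicant = {"income": 40_000, "debt_ratio": 0.2, "region": "north"}
before = predict(applicant)   # primary model decides
flagged.add("north")          # monitoring flags the segment
after = predict(applicant)    # shadow model now decides
print(before, after)
```

Keeping the flagged set outside the router means mitigation can be switched on or off per segment without redeploying either model.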

Existing and upcoming regulations mean Model Governance is valuable in every industry

In the past year, we’ve seen progress on AI regulations, from the European Commission’s proposal, to NIST publishing principles on Explainable AI, to the White House Office of Science and Technology Policy’s bill of rights for an AI-powered world. Local governments are often faster to move on new regulations to protect citizens, and New York City law now requires bias audits of AI hiring tools, to be enforced starting January 2023.

GDPR already contains EU provisions for personal data protection and privacy rights that apply to AI. More recently, the U.S. Algorithmic Accountability Act of 2022 was introduced to add transparency and oversight of software, algorithms, and other automated systems.

Below is a summary of what the Algorithmic Accountability Act aims to accomplish:

- Provide a baseline requirement that companies assess the impacts of automating critical decision-making, including decision processes that have already been automated.

- Require the Federal Trade Commission (FTC) to create regulations providing structured guidelines for assessment and reporting.

- Place responsibility for assessing impact on both the companies that make critical decisions and those that build the technology that enables these processes.

- Require reporting of select impact-assessment documentation to the FTC.

- Require the FTC to publish an annual anonymized aggregate report on trends and to establish a repository of information where consumers and advocates can review which critical decisions have been automated by companies along with information such as data sources, high level metrics and how to contest decisions, where applicable.

- Add resources to the FTC to hire 50 staff and establish a Bureau of Technology to enforce this Act and support the Commission in the technological aspects of its functions.

In alignment with these concepts, below is a blueprint for ML model governance we’re building at Fiddler to help enterprises build trustworthy AI.

Could this 5-step Model Governance solution work for your team? If you’d like to explore what this could look like, watch our platform in action. Fiddler has helped countless large organizations achieve a Model Governance process to scale their AI initiatives while avoiding risk.

This blog has been republished by AIIA.
