With every generation of computing comes a dominant new software or hardware stack that sweeps away the competition and catapults a fledgling technology into the mainstream.

I call it the Canonical Stack (CS).

Think of the Wintel dynasty of the 1980s and 1990s, with Microsoft Windows on 95% of all PCs with “Intel inside.” Think of the LAMP and MEAN stacks. Think of Amazon’s S3 becoming a near-universal API for storage. Think of Docker and Kubernetes for containers and cloud orchestration.

The stack emerges from the noise of tens of thousands of other solutions, as organizations try to solve the same extremely hard problems. In the early days of any complex system, the problems are legion, and stalled progress on one blocks progress on dozens of others. But when people solve one problem completely, it opens the door to a massive number of new solutions.

In the early days of the Internet, engineers worked to solve thousands of novel problems at the same time, with each solution building on the last. Once someone invents SSL, you can transfer information with encryption. Once you have a browser like Netscape that supports SSL, you can start working on e-commerce. Each solution unlocks a new piece of the puzzle and lets people build more and more complex applications.

As more and more pieces of the stack come together, the “network effect” kicks in: each node that comes online makes the network more valuable. When enough people have joined, you hit a “tipping point,” and adoption accelerates rapidly up the steep middle of an S curve. Once it accelerates fast enough, you hit critical mass and adoption becomes unstoppable.

When a CS forms, it lets developers move “up the stack” to solve more interesting problems. Over the last few decades, traditional software development has reached dizzying new heights as better and better stacks emerged. In the 1980s and 1990s, it took a small army of developers to write a database with an ugly interface that could serve a few thousand corporate users.

It took only 35 engineers to reach 450 million users with WhatsApp.

That’s the network effect at work: any team can leverage cutting-edge IDEs, APIs, and libraries from dozens of other teams to deliver innovation at a breakneck pace.

We can track the formation of a CS with the famous Technology Adoption Curve.

In his 1962 book Diffusion of Innovations, sociologist Everett Rogers showed that people and enterprises fall into five distinct groups when it comes to adopting new technology: Innovators, Early Adopters, Early Majority, Late Majority, and Laggards. Geoffrey Moore built on these ideas in his business best-seller Crossing the Chasm.
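Rogers also put rough sizes on each group: about 2.5%, 13.5%, 34%, 34%, and 16% of adopters. This tiny Python sketch adds those shares up in order to show how cumulative adoption traces the S curve. Moore’s “chasm” is the gap after the first 16%, between the Early Adopters and the Early Majority. The names and shares come from Rogers; the code is just an illustration.

```python
# Rogers' five adopter categories with their approximate share of all adopters
# (in percent), as given in Diffusion of Innovations.
ADOPTER_CATEGORIES = [
    ("Innovators", 2.5),
    ("Early Adopters", 13.5),
    ("Early Majority", 34.0),
    ("Late Majority", 34.0),
    ("Laggards", 16.0),
]


def cumulative_adoption(categories):
    """Return (category, cumulative percent adopted once that group has joined)."""
    total = 0.0
    result = []
    for name, share in categories:
        total += share
        result.append((name, total))
    return result


if __name__ == "__main__":
    for name, cumulative in cumulative_adoption(ADOPTER_CATEGORIES):
        print(f"{name:<15} {cumulative:5.1f}%")
```

The running total climbs slowly (2.5%, 16%), jumps through the middle (50%, 84%), and then flattens out at 100%: the S curve.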

Think of the smartphone. I ran out and got the first Apple iPhone, which put me among the Early Adopters. I benefited because I managed to keep the same unlimited cell contract for fifteen years, long after every carrier phased those plans out. But I also suffered, because the network effect hadn’t kicked in yet and the early phone had nowhere near as many apps as we have today. Eventually, the Early Majority signed on to the smartphone revolution, and it wasn’t long before we had mega-apps like Instagram, Airbnb, WhatsApp, and Uber that leveraged the smartphone Canonical Stack: a mobile OS, GPS, and the Internet in your pocket. Now the smartphone is ubiquitous, and almost everyone has one, even in the farthest reaches of the world.

If you know anyone still using a flip phone, they’re part of the Laggards.

Now something new is happening in computing. The very nature of software is changing. We’ve gotten so good at building hand-coded software that we’ve reached the upper limits of what it can do.

Until recently, coders crafted all the logic of their software by hand. That works amazingly well for designing interfaces to spreadsheets, but not so well for recognizing cats in pictures or recognizing your voice on your phone. That’s why a new kind of software has emerged in the last decade.

Machine learning.

Instead of hand-coding rules, we train machines and they learn the rules on their own.
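To make the difference concrete, here’s a toy sketch in Python. The fraud-flagging task, the dollar amounts, and the helper names are all hypothetical. The point is that the hand-coded rule uses a cutoff a programmer picked, while the learned rule gets its cutoff from labeled examples.

```python
# Hypothetical task: flag transactions as suspicious based on amount alone.

def hand_coded_rule(amount: float) -> bool:
    # A programmer chose this cutoff by hand.
    return amount > 1000.0


def learn_threshold(examples):
    """Learn a cutoff from (amount, is_fraud) pairs.

    Tries each observed amount as a cutoff (flag anything strictly above it)
    and keeps the one that misclassifies the fewest examples.
    """
    best_cutoff, best_errors = None, len(examples) + 1
    for cutoff, _ in examples:
        errors = sum((amount > cutoff) != is_fraud for amount, is_fraud in examples)
        if errors < best_errors:
            best_cutoff, best_errors = cutoff, errors
    return best_cutoff


# Labeled examples: the data, not a programmer, defines the rule.
EXAMPLES = [(20.0, False), (75.0, False), (300.0, False),
            (450.0, True), (800.0, True), (1500.0, True)]

if __name__ == "__main__":
    cutoff = learn_threshold(EXAMPLES)
    print(cutoff)                  # 300.0
    print(hand_coded_rule(800.0))  # False: the hand-picked rule misses this one
    print(800.0 > cutoff)          # True: the learned rule catches it
```

Real models learn millions of parameters instead of one cutoff, but the shift is the same: change the training data and the rule changes with it, without anyone rewriting code.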

We need machine learning to solve previously unsolvable problems, like driving cars autonomously, detecting fraud, recognizing faces, flying drones, and understanding natural language. And while machine learning has a lot in common with traditional software development, it’s also radically different, and it demands a different Canonical Stack.

The Machine Learning Stack

The ML development cycle has multiple unique steps, like training and hyperparameter optimization, that simply don’t match the way traditional software development works.

Researchers and big tech companies developed many of the best algorithms, as well as the tools they needed to train those algos into models and deploy them into production. As big tech solves the problems of creating, training and serving models in production apps, more and more of those tools make their way into open source projects and that drives the AI revolution farther and faster. As more teams get their hands on those tools we’re finally starting to see the “democratization of AI.”

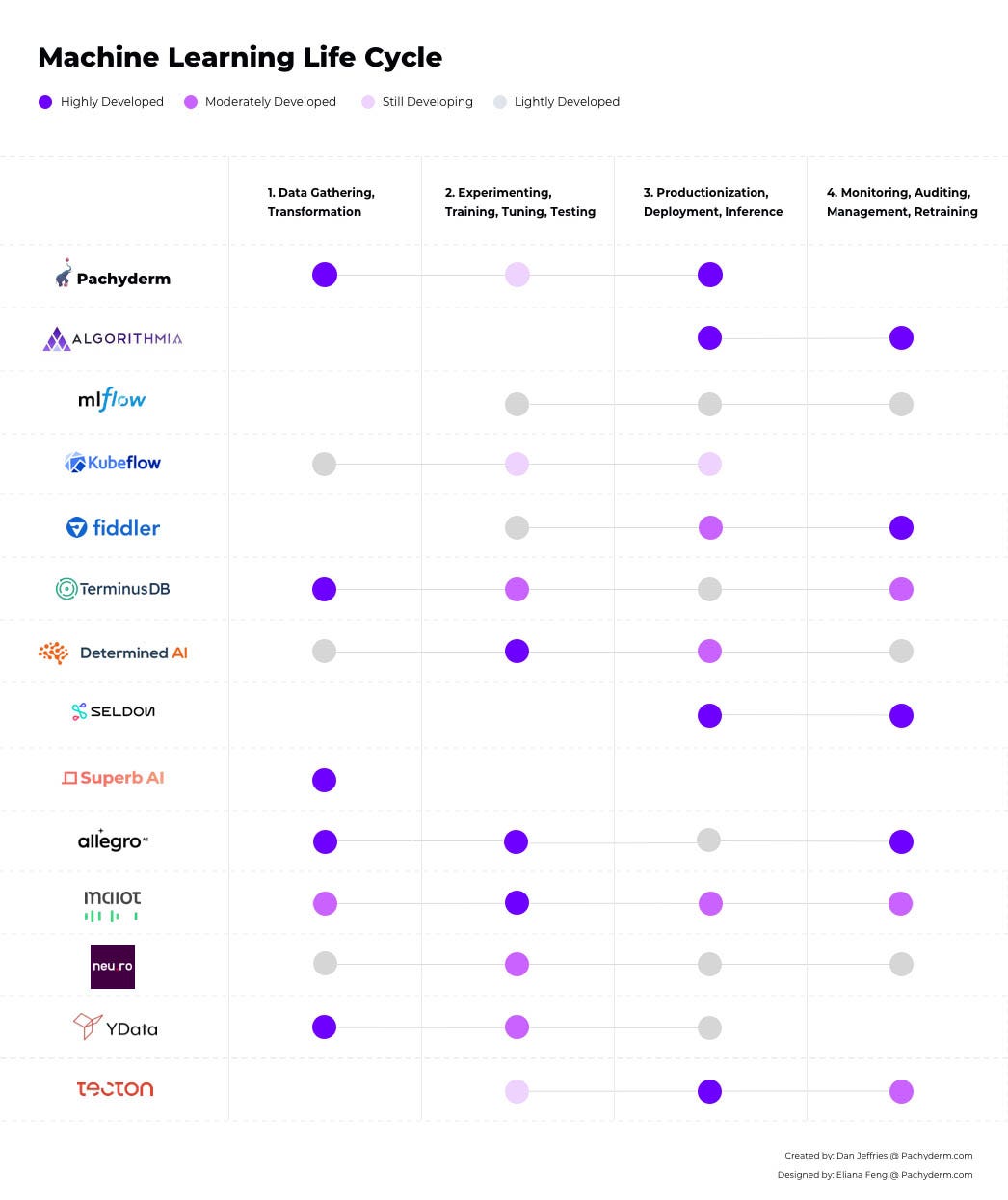

As the dust settles, we can see the early outlines of the Machine Learning Canonical Stack (MLCS) forming. We can also start to clearly see the major stages of the Machine Learning Lifecycle (MLL), the steps it takes to build a cutting-edge model and put it to work in the real world. Until now, many companies couldn’t even see the stages. That’s why you see articles with a NASCAR slide of logos spread across 45 categories of machine learning.

There won’t be 45 categories of machine learning.

There will be three or four major ones as the technology continues to develop into the powerhouse technology of the next decade.

The Machine Learning Lifecycle

The MLL has four key stages:

- Data Gathering and Transformation

- Experimenting, Training, Tuning and Testing

- Productionization, Deployment, and Inference

- Monitoring, Auditing, Management, and Retraining

Way too much of this process is still incredibly manual. Everywhere we still see data scientists writing web scrapers and glue scripts, copying data out of databases, cobbling it all together, moving it from place to place.

That’s changing fast.

We’re already seeing the early contenders for the crown for each stage in the ML development cycle emerging from the chaos of competition.

Data Gathering and Transformation

As teams try to wrangle their data, we’re seeing pioneers like Pachyderm deliver data version control at scale with an immutable, copy-on-write file system that fronts object stores like Google Cloud Storage (GCS), Amazon S3, Azure Blob Storage, and MinIO.

Data version control is something unique to the AI/ML pipeline and one of the problems that every data science team eventually hits as they try to scale their team to deliver models into production fast.

TerminusDB takes a different approach to distributed revision control than Pachyderm, delivering a scalable graph database with Git-like version control. While Pachyderm handles any kind of file you can put into an object store, TerminusDB works best with data that fits well into a database.
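Neither Pachyderm’s nor TerminusDB’s internals are shown here. This is a hypothetical in-memory toy that illustrates the idea the two share: every commit is an immutable snapshot, file contents are stored once under their content hash, and a new commit copies only a small path-to-hash map, so any earlier version of a training set can be read back exactly.

```python
import hashlib
from dataclasses import dataclass
from types import MappingProxyType
from typing import Dict, Optional


@dataclass(frozen=True)
class Commit:
    id: str
    parent: Optional[str]
    files: MappingProxyType  # read-only view: path -> content hash


class VersionedStore:
    """Hypothetical toy repo: immutable commits over content-addressed blobs."""

    def __init__(self) -> None:
        self.blobs: Dict[str, bytes] = {}     # content hash -> bytes, stored once
        self.commits: Dict[str, Commit] = {}  # commit id -> Commit
        self.head: Optional[str] = None

    def commit(self, changes: Dict[str, bytes]) -> str:
        # Copy only the parent's small path -> hash map. Unchanged files keep
        # pointing at the same blobs; file contents are never duplicated.
        files = dict(self.commits[self.head].files) if self.head else {}
        for path, data in changes.items():
            digest = hashlib.sha256(data).hexdigest()
            self.blobs.setdefault(digest, data)
            files[path] = digest
        # Derive the commit id from its parent and its contents.
        commit_id = hashlib.sha256(
            repr((self.head, sorted(files.items()))).encode()
        ).hexdigest()
        self.commits[commit_id] = Commit(commit_id, self.head, MappingProxyType(files))
        self.head = commit_id
        return commit_id

    def read(self, commit_id: str, path: str) -> bytes:
        # Any past commit can be read back exactly as it was.
        return self.blobs[self.commits[commit_id].files[path]]


if __name__ == "__main__":
    store = VersionedStore()
    v1 = store.commit({"train.csv": b"x,y\n1,0\n"})
    v2 = store.commit({"train.csv": b"x,y\n1,0\n2,1\n", "labels.txt": b"cat\n"})
    print(store.read(v1, "train.csv"))  # the first version is untouched
    print(len(store.blobs))             # 3: two train.csv versions plus labels.txt
```

Because old commits never change, a model trained on `v1` can always be traced back to exactly the data it saw, which is the reproducibility that data version control buys you.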

Once companies have that data, SaaS services like Superb AI help data scientists quickly label vast swaths of it without doing it all by hand, a crucial step for feeding data-hungry neural nets. YData also helps data science teams rapidly label, clean, and build datasets, and even generate synthetic datasets to augment their data for the training, tuning, and testing phase.

Experimenting, Training, Tuning and Testing

After the data is all cleaned and labeled, it’s platforms like the maiot core engine or Allegro AI with their Trains framework that form a smooth experimentation and training pipeline.

Open-source libraries like TFX and Horovod are emerging as the standard for rapidly scaling training across GPUs and TPUs, but those libraries will likely get baked into more advanced training, data, and visualization systems like Determined AI. Determined AI leverages Horovod to slice up and scale training jobs across GPUs, while also handling hyperparameter tuning and testing lots of different algorithm variations against each other in a full-scale Darwinian war. That’s no small feat, because parallelizing an algorithm to run across multiple GPUs was a manual process only a few years ago. We’re also seeing libraries like Hyperopt used for rapid hyperparameter search and tuning.
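Here’s a toy simulation, in plain Python, of the data-parallel idea behind tools like Horovod: each worker (standing in for a GPU) computes a gradient on its own shard of the data, and an allreduce step averages those gradients so every worker applies the same update. The one-parameter model, the data, and the function names are hypothetical, and real systems do the averaging across devices and machines rather than in a Python list.

```python
# Toy model: y ≈ w * x with mean squared error. Data, worker count and
# learning rate are hypothetical.

def gradient(w, batch):
    """Gradient of mean((w*x - y)**2) with respect to w over one batch."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def shard(data, num_workers):
    """Split data into equal contiguous shards, one per worker (e.g. one per GPU).

    Assumes len(data) is divisible by num_workers; any remainder is dropped.
    """
    size = len(data) // num_workers
    return [data[i * size:(i + 1) * size] for i in range(num_workers)]


def allreduce_mean(values):
    """Stand-in for an allreduce: every worker ends up with the same average."""
    return sum(values) / len(values)


def data_parallel_step(w, data, num_workers, lr):
    # Each worker computes a gradient on its own shard (in parallel, in reality).
    local_grads = [gradient(w, part) for part in shard(data, num_workers)]
    # Workers average their gradients and all apply the identical update.
    return w - lr * allreduce_mean(local_grads)


DATA = [(float(x), 3.0 * x) for x in range(1, 9)]  # true slope is 3

if __name__ == "__main__":
    w = 0.0
    for _ in range(50):
        w = data_parallel_step(w, DATA, num_workers=4, lr=0.01)
    print(round(w, 3))
```

With equal-sized shards, the averaged shard gradients equal the full-batch gradient, so each data-parallel step here matches one ordinary full-batch step; the work is split up, but the math doesn’t change.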

If a team’s architectures are neural nets, it will almost certainly design them in one of two essential frameworks: PyTorch, backed by Facebook, or TensorFlow, backed by Google. Those powerhouse platforms have created a two-horse race in a field where many other frameworks, like MXNet and Chainer, once competed.

Of course, not every team develops algorithms and models from scratch. Many teams may never need to go beyond the state of the art. They can grab a top-of-the-line model, use transfer learning to adapt it to their specific dataset, and deliver incredible performance on a known problem like product recommendation, automatic image tagging, or sentiment analysis.
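A minimal sketch of the transfer-learning recipe, assuming a frozen feature extractor and a new trainable head. The “pretrained” weights and the four-example dataset are hypothetical stand-ins for a real pretrained network and a real labeled dataset. Only the head’s weights change during training.

```python
import math

# Hypothetical "pretrained" layer; it is never updated during training below.
PRETRAINED_WEIGHTS = [[1.0, 1.0], [1.0, -1.0]]


def extract_features(x):
    """Frozen feature extractor: a fixed linear map followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in PRETRAINED_WEIGHTS]


def train_head(dataset, epochs=1000, lr=0.1):
    """Train only a new logistic-regression head on top of the frozen features."""
    head, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, label in dataset:
            feats = extract_features(x)
            z = sum(w * f for w, f in zip(head, feats)) + bias
            p = 1.0 / (1.0 + math.exp(-z))
            err = p - label  # derivative of the log loss with respect to z
            head = [w - lr * err * f for w, f in zip(head, feats)]
            bias -= lr * err
    return head, bias


def predict(head, bias, x):
    feats = extract_features(x)
    return sum(w * f for w, f in zip(head, feats)) + bias > 0


# Small hypothetical target dataset: label 1 when x[0] > x[1].
DATASET = [([2.0, 0.0], 1), ([3.0, 1.0], 1), ([0.0, 2.0], 0), ([1.0, 3.0], 0)]

if __name__ == "__main__":
    head, bias = train_head(DATASET)
    print([predict(head, bias, x) for x, _ in DATASET])
```

In a real framework the same pattern means loading pretrained weights, marking them as non-trainable, and training only the last layer or two on your own data, which needs far less data and compute than training from scratch.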

Expect to see more and more fully trained systems embedded in applications everywhere. We’ve already seen the emergence of pretrained Transformer models through libraries like Hugging Face’s Transformers, and they won’t be the last.

We’re seeing lots of companies and organizations try to become the “hub” for pretrained models, like PyTorch Hub and TensorFlow Hub. Companies like Algorithmia want to deliver a SaaS API front end to pretrained models, rather than having companies download and run their own copy of a model. Algorithmia has a good shot at becoming the GitHub of trained model access, running those processor-intensive models at scale. Someone will develop the model repository that everyone standardizes on in the coming years, perhaps something like ModelHub, but right now it’s anyone’s game.

Of course, all these libraries, hyperparameter searches, and training runs need an engine to power them and that’s where pipeline platforms come into play.

Productionization, Deployment, and Inference

Pipeline platforms form the bedrock of the Canonical Stack for ML.

Pipeline engines will likely absorb many of the other pieces of the AI/ML puzzle to form a complete, end-to-end system for selecting, training, testing, deploying and serving AI models.

There are three major contenders for the pipeline crown:

- Kubeflow

- MLflow

- Pachyderm

Pachyderm is the most straightforward of the pipeline systems. It uses well-defined JSON or YAML pipeline specifications to run containers that transform data and train and track models as they move through the ML lifecycle. It’s data-driven, so it can use the state of the data to trigger pipelines every time that data changes. Its pipeline system can also be swapped out, letting Pachyderm provide data lineage to other pipeline systems, like Kubeflow or MLflow, with ease.
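Pachyderm’s pipeline specs are plain JSON or YAML. This Python sketch builds a minimal spec as a dictionary and prints it as JSON. The field layout (`pipeline.name`, `transform.image`, `transform.cmd`, `input.pfs.repo`, `input.pfs.glob`) follows Pachyderm’s OpenCV “edges” example; the names, image, and script path are illustrative, so check the pipeline specification docs for your Pachyderm version before relying on it.

```python
import json


def make_pipeline_spec(name, image, cmd, input_repo, glob="/*"):
    """Build a minimal Pachyderm-style pipeline spec as a Python dict."""
    return {
        "pipeline": {"name": name},
        # The container image and command that transform the input data.
        "transform": {"image": image, "cmd": cmd},
        # Read from a versioned input repo; the glob decides how its files are
        # split into datums. New commits to the repo trigger the pipeline.
        "input": {"pfs": {"repo": input_repo, "glob": glob}},
    }


if __name__ == "__main__":
    spec = make_pipeline_spec(
        name="edges",
        image="pachyderm/opencv",
        cmd=["python3", "/edges.py"],
        input_repo="images",
    )
    print(json.dumps(spec, indent=2))
```

Saved as `edges.json`, a spec like this is submitted with `pachctl create pipeline -f edges.json` in recent versions of the CLI. A glob of `/*` treats each top-level file or directory in the input repo as its own datum, so new images can be processed without rerunning the old ones.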

Kubeflow is backed by Google and others, and it’s developing into a robust ecosystem that covers a lot of ground. Primarily, it functions as an orchestration engine, leveraging the power of Kubernetes to quickly scale containers. But it’s still a sprawling project with a lot of cooks in the kitchen, and it’s in danger of becoming the OpenStack of machine learning: something that started off as a great idea but never reached wide adoption because of its complexity.

The last big contender is MLflow, backed by Databricks, a company that specializes in traditional statistical analytics with Spark. MLflow has a lot of backing from big companies contributing to its codebase. Whereas Kubeflow came from a philosophy of integrating lots of disparate components into a unified architecture, MLflow delivers a clean, well-defined, purpose-driven, and simple design, since it grew out of a single company’s effort to craft an end-to-end pipeline.

Many companies and organizations use Airflow for their AI/ML pipelines, but it wasn’t purpose-built for AI. Airbnb created it to manage complex workflows in any kind of software. It has a large ecosystem and installed user base, but its main shortcomings are that it wasn’t built specifically for AI workflows and that it’s 100% Python-focused, with no easy way to plug in other languages. The likely winner in the long run will have a pure AI/ML focus, and it won’t be bound to a single language or framework.

What you won’t see is something like Amazon’s SageMaker in the hunt for the industry-standard pipeline engine. Products like SageMaker will always make money, and companies that are all in on Amazon will pay for the privilege, but SageMaker is hopelessly locked to a single cloud. That means it won’t ever become part of the CS unless Amazon opens it up to other clouds.

On the other hand, we have seen good adoption of closed-source pipeline systems like Algorithmia’s MLOps stack, because it isn’t locked in and can run anywhere, unlike its Amazon rival. We’re also seeing pipelines that integrate well with lots of other stacks, like Neu.ro, start to gain real traction, because they fit together with other solutions like Lego bricks. Lastly, Allegro AI’s enterprise offering delivers in multiple categories of the pipeline and is gaining a lot of traction, as is Modzy in the defense space.

It’s too early to pick a winner in the race for pipeline supremacy, but the race is heating up now. Venture capital is pouring in, because if you create the infrastructure that powers machine learning in the future, you’re useful to every single company and project out there.

All those companies and projects help data scientists rapidly create a working model. Now we’ve got to deploy it into production so it can get to work. That’s where model serving, monitoring and explainability frameworks come onto the scene.

Seldon Deploy has swiftly become one of the top model serving systems for ML on the planet. Algorithmia’s enterprise infrastructure stack also plays in this space, competing with Seldon to serve models at scale and to productionize and deploy them fast.

Deployment systems run A/B and canary tests to verify that the model really does what you expect after it leaves development and goes to production. That lets you test with a small subset of users first to make sure it works. Deployment systems also retire old models but keep them archived for quick redeployment in case anything goes wrong.
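A hypothetical sketch of the canary half of that process, plus the archive used for rollback. The `ModelRouter` class and the model names are made up for illustration. The technique is deterministic hash bucketing: each user consistently sees the same model, while only a small percentage reaches the new one.

```python
import hashlib


class ModelRouter:
    """Hypothetical router: canary rollout plus an archive for quick rollback."""

    def __init__(self, stable_model):
        self.stable = stable_model
        self.canary = None
        self.canary_percent = 0
        self.archive = []  # retired models, kept for redeployment

    def start_canary(self, candidate, percent):
        self.canary, self.canary_percent = candidate, percent

    def route(self, user_id):
        # Hash the user id into a bucket from 0 to 99 so the same user
        # always lands on the same model.
        bucket = int(hashlib.sha256(user_id.encode()).hexdigest(), 16) % 100
        if self.canary is not None and bucket < self.canary_percent:
            return self.canary
        return self.stable

    def promote(self):
        # The canary looks healthy: archive the old model instead of deleting it.
        self.archive.append(self.stable)
        self.stable, self.canary, self.canary_percent = self.canary, None, 0

    def rollback(self):
        # Something went wrong: redeploy the most recently archived model.
        self.stable = self.archive.pop()


if __name__ == "__main__":
    router = ModelRouter("fraud-model-v1")
    router.start_canary("fraud-model-v2", percent=5)
    users = [f"user-{i}" for i in range(10_000)]
    share = sum(router.route(u) == "fraud-model-v2" for u in users) / len(users)
    print(f"canary share: {share:.1%}")
```

Hashing instead of picking at random keeps each user’s experience stable during the test, which makes the canary’s metrics easier to compare against the stable model’s.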

Monitoring, Auditing, Management, and Retraining

Finally, we have a live production AI app. It’s working in the real world and serving customers. Now we need to keep tabs on it in this stage of the AI/ML flow.

We have a number of frameworks, like Splunk, that have incorporated machine learning into their monitoring applications, but we have almost no applications that monitor the AIs themselves. More and more log analysis tools and real-time monitoring frameworks will roll ML into the mix in the coming years. They’ll do anomaly detection and smart, automated incident management, either fixing problems before any human has to touch the system or suggesting possible solution paths to human engineers, as Red Hat Insights does.
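The simplest statistical baseline for that kind of anomaly detection is a z-score check against a known-good window. This sketch is far simpler than what tools like Splunk or Red Hat Insights actually do, and the latency numbers and the three-standard-deviation threshold are assumptions for illustration.

```python
import statistics


def find_anomalies(baseline, recent, threshold=3.0):
    """Return values in `recent` more than `threshold` standard deviations
    away from the mean of a known-good `baseline` window."""
    mean = statistics.mean(baseline)
    stdev = statistics.stdev(baseline)
    return [value for value in recent if abs(value - mean) > threshold * stdev]


if __name__ == "__main__":
    # Hypothetical request latencies in milliseconds.
    normal_latency = [100, 102, 98, 101, 99, 100, 103, 97]
    print(find_anomalies(normal_latency, [101, 99, 150, 104]))  # [150]
```

ML-driven tools replace the fixed mean and threshold with learned models of normal behavior (seasonality, correlated metrics), but the question they answer is the same: is this value surprising given what we’ve seen before?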

But we’ll need monitoring of the AIs themselves too.

Production AI teams need to know: Is the app still performing well? Did a major black swan event like COVID wreck your supply chain management model, the way it wrecked so many production AI models? Is the model drifting? Does the machine log every decision it makes to a distributed database for later auditing and forensics?
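One common way to answer “Is it drifting?” is the population stability index (PSI), which compares how a feature is distributed in training data versus production. Here’s a minimal sketch, assuming fixed bin edges and a small floor (`eps`) so empty bins don’t blow up the logarithm. The 0.2 alert level in the comment is a widely used rule of thumb, not a standard, and the sample data is hypothetical.

```python
import bisect
import math


def population_stability_index(expected, actual, edges, eps=1e-6):
    """PSI between a baseline sample and a production sample of one feature.

    `edges` are sorted bin boundaries. Values below edges[0] fall in the first
    bin and values at or above edges[-1] fall in the last bin.
    """
    def proportions(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[bisect.bisect_right(edges, v)] += 1
        # Floor each share at eps so empty bins don't cause log(0) or division by 0.
        return [max(c / len(values), eps) for c in counts]

    p, q = proportions(expected), proportions(actual)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


if __name__ == "__main__":
    edges = [0.2, 0.4, 0.6, 0.8]
    training = [i / 100 for i in range(100)]          # hypothetical feature values
    production = [0.5 + i / 200 for i in range(100)]  # same feature, shifted upward
    psi = population_stability_index(training, production, edges)
    # Rule of thumb: PSI above about 0.2 is often treated as a significant shift.
    print(f"PSI = {psi:.2f}", "drift!" if psi > 0.2 else "stable")
```

A check like this runs per feature on a schedule; a spike after an event like COVID is the signal to investigate and, if needed, retrain.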

Legislation coming down the pipe from governments everywhere will demand more and more monitoring of these applications. Regulators will want explanations when things go wrong, and so will you, your business, and the legal system. Uber faced intense investigation when one of its self-driving test cars killed a woman in Arizona, and the safety driver was recently charged with negligent homicide, so the stakes for monitoring and understanding every aspect of your AI are very, very high. Companies will need that monitoring in place before their AI destroys a life or the bottom line.

Seldon’s Alibi and Fiddler’s framework can help deliver that explainability, which lets us interrogate a model to understand why it made its decisions and peel back the black box. If a convolutional neural net starts labeling things wrong, for example, we might have it tell us which pixels it focused on when it decided a picture contained a dog. Of the two, Fiddler’s framework goes further, combining monitoring and explainability into a single stack.
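Here’s a toy version of that pixel question using occlusion, one of the simplest attribution techniques: blank out one pixel at a time and measure how much the model’s score drops. It isn’t necessarily the method Alibi or Fiddler uses, and the 3x3 “image” and the linear “dog detector” are hypothetical.

```python
# Hypothetical "dog detector": a linear score that mostly relies on the center pixel.
WEIGHTS = [[0.1, 0.1, 0.1],
           [0.1, 2.0, 0.1],
           [0.1, 0.1, 0.1]]


def dog_score(image):
    return sum(w * p for w_row, p_row in zip(WEIGHTS, image)
               for w, p in zip(w_row, p_row))


def occlusion_map(model, image, fill=0.0):
    """For each pixel, how much the score drops when that pixel is blanked out."""
    base = model(image)
    heat = []
    for r, row in enumerate(image):
        heat_row = []
        for c in range(len(row)):
            occluded = [list(pixels) for pixels in image]  # copy; leave input intact
            occluded[r][c] = fill
            heat_row.append(base - model(occluded))
        heat.append(heat_row)
    return heat


if __name__ == "__main__":
    image = [[1.0, 1.0, 1.0] for _ in range(3)]
    for row in occlusion_map(dog_score, image):
        print([round(v, 2) for v in row])  # the center pixel dominates
```

On a real image you would occlude patches rather than single pixels and overlay the resulting heat map on the picture; if the “dog” evidence sits on the background instead of the dog, you’ve found a reason for the mislabels.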

We’ll also see a massive rise in direct attacks on AI systems. Even if you manage to protect those systems as they roll into production, they are still subject to new kinds of attacks that exploit gaps in their logic and reasoning. That’s something no security team on the planet has had to deal with before. Bugs and exploits can bring down production IT systems, but it’s entirely possible to have a “bug-free” AI that still makes mistakes attackers can exploit.

Facebook built an AI Red Team to stop attacks on Instagram when clever users used tiny, imperceptible patterns in images to fool its nudity detectors. Only a few months ago, I wrote about building AI Red Teams to stop problems before they start; now they’re a reality, and companies everywhere are racing to build them.
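To see why tiny, imperceptible patterns work, here’s a toy fast gradient sign method (FGSM) attack on a hypothetical linear detector. For a linear score `w·x + b`, the gradient with respect to the input is just `w`, so shifting every feature by `epsilon` against the sign of its weight lowers the score as much as possible within that per-feature budget. The weights, input, and epsilon are made up, and nothing here describes how the Instagram attacks actually worked.

```python
# Hypothetical linear "detector": flags an input when score > 0.
N = 1000
W = [0.01 if i % 2 == 0 else -0.01 for i in range(N)]
B = 0.0
# Hypothetical input (feature values on a 0-to-1 scale) that the detector flags.
X = [0.5 + 0.005 * (1 if w > 0 else -1) for w in W]
EPSILON = 0.01  # per-feature perturbation budget


def score(w, b, x):
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def sign(v):
    return (v > 0) - (v < 0)


def fgsm_lower_score(w, x, epsilon):
    """FGSM step for a linear score: the input gradient is w, so move each
    feature by epsilon against sign(w) to push the score down."""
    return [xi - epsilon * sign(wi) for wi, xi in zip(w, x)]


if __name__ == "__main__":
    adversarial = fgsm_lower_score(W, X, EPSILON)
    print(score(W, B, X) > 0)            # True: the original input is flagged
    print(score(W, B, adversarial) > 0)  # False: the perturbed input slips through
```

Each feature moves by only 0.01 on a 0-to-1 scale, yet a thousand tiny nudges add up and flip the decision. Deep networks are attacked the same way, using the gradient computed through the whole network, which is why red teams and adversarial detectors matter.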

Seldon’s Alibi Detect works to discover adversarial attacks on neural networks, and tools like it will join the toolkit of every Red Team.

The New Stack Now

It takes time for a Canonical Stack to develop in any new field. Before it develops and unleashes a tidal wave of innovation, it’s often very hard for anyone to see where it’s all going. Most people can’t see the future easily; they can only see what’s happening right now. When there’s no solution to a current problem, they imagine we’ll never have one.

But there’s always a solution, given enough time and interest and investment.

AI will touch and transform every single industry and country on Earth with more impact than the Internet itself.

As we solve one problem, it opens up new possibilities. Eventually when you solve enough problems you have a network effect that catapults a new technology into the mainstream. AI is already starting to feel the power of the network effect and progress is accelerating faster and faster.

We’ll soon have intelligence woven into every app, every device, every vehicle and product on the planet.

With massive investment, real solutions, and real, cutting-edge products emerging, it’s a perfect storm that will democratize AI and deliver it to the whole world.

And it’s the Canonical Stack that will make it reality.

###########################################

I’m an author, engineer, pro-blogger, podcaster, public speaker, and the Chief Technical Evangelist at Pachyderm, a Y Combinator-backed MLOps startup in San Francisco. I also run the Practical AI Ethics Alliance and the AI Infrastructure Alliance, two open communities helping make the Canonical Stack a reality and making sure AI works for all of us.

###########################################

This article includes affiliate links to Amazon.
