Modzy is an Artificial Intelligence (AI) platform designed to bridge the gap over what we call the “AI Valley of Death”, or the often difficult process of getting an AI or machine learning (ML) model out of the lab and into production in a secure and scalable way. Based on our expertise in building an API-driven we’re sharing lessons learned that you might find useful when developing your own API, especially for new and emerging technologies.

One of the first things that we needed to take into account about AI is that there is more than one stakeholder, or role, that we needed to consider when building our APIs.

First, there are data scientists, who are experts in math and algorithm development, but may only have a small amount of programming experience. It is unlikely that are experienced with cloud architecture, distributed systems, networking, and security, all of which are important in delivering a secure, scalable model into production. They may also be unsure how much CPU, RAM, and other hardware to allocate to their models which is important for being cost-effective, especially when running at scale.

Second, you have application developers who have a lot of programming experience, but for whom the models might as well be magic. Many models require their inputs to follow a very specific format for the inference to work optimally. This could include things like stripping color channels from an image, resizing an image to a particular pixel dimension, encoding audio using a particular codec or sample rate, etc. Without prior knowledge of what these requirements are, application developers may unwittingly provide non-optimal inputs resulting in a bad experience for the users of that model. Application developers should also not be expected to know or care what, if any, special hardware requirements any particular model might have. To them, it should be an API or function call.

Which brings us to the third role: the DevOps and infrastructure engineers who take the model built by the data scientists and put it into production so that it can be used by the application developers. These are the folks who aren’t as familiar with AI models and application development, but who are the custodians of the production hardware and are responsible for monitoring, scaling, and securing both the applications and the models in production.

The coordination between these different roles with their varying skillsets and level of comfortability with each of the technologies required to make the whole process work can be complicated, especially in large organizations. This is one reason why industry research indicates that some 50% of models built never make it into production. And even the ones that do make it into production do so up to nine months after the model is ready.

In response to this reality, a new field has been emerging called ModelOps, which aims to bring the same benefits to the AI model lifecycle that DevOps has brought to the application lifecycle.

So let’s define what ModelOps is. ModelOps is to artificial intelligence and machine learning what DevOps is to application development. And like DevOps, it has similar aims.

Automate as much as possible

First, automate as much as possible and provide self-service APIs to invoke the automation, particularly those steps that hand off between different personas. This reduces the demand on your DevOps and infrastructure teams and allows for more consistent, auditable, and rapid delivery since each team does not require the time or attention of another team to deliver their work.

Provide APIs for interacting with the automation

Second, integrate your automation with the tools and services that each team uses already. For data scientists, this means making it easy for them to “release” a model straight from their training tool of choice without needing to go through extra build and packaging steps that they may not be as familiar with such as Jenkins, Docker, etc. For application developers, this means having documentation, convenience libraries, and SDKs that take the guesswork out of how to use each model correctly.

Integrate with existing tools and services

Third, utilize, participate in, and contribute back to the open-source community that is growing around ModelOps. One of the great strengths of the DevOps community is the wealth of open-source software available to automate particularly complex or difficult parts of the process, and mixing a cocktail of these tools together can bring a level of maturity to a release process that would be difficult to achieve if you had to write everything yourself. Similarly, the ModelOps community is following in DevOps’ footsteps and every month there are new and powerful tools being made available that help to simplify or automate some of the difficult parts of releasing models into production. Two particularly exciting open-source tools include the Open Model Interface, which provides a spec for multi-platform OCI-compatible container images for ML models, and chassis.ml, which builds models directly into DevOps-ready container images for inference.

Tying all of these things together are APIs. They’re the glue that allows for the easy adoption and integration of one tool or system into another and so the better the API, the easier and more useful that tool or system will be.

What Makes a Great API?

At the end of the day, a great API is one that enables both humans and computers to easily adopt and use the functionality provided by that API. It’s important to recognize that APIs need to be great for both humans and computers. Computers will end up consuming the API at the end of the day, but it will take a human programmer to write the code to use the API so both aspects ought to be considered to make an API great.

It should be semantic

We all know that our programs are compiled down to machine code and become a bunch of ones and zeros, but unless you’re a bad TV movie hacker, you’re probably writing your program using meaningful words for your functions, variables, etc. The first quality of a great API is that it should be semantic, which means that a mildly knowledgeable person should be able to read your API and have a pretty good idea about what it does and how to use it. This means that you use language to provide hints and clues about how it works and what information a user of your API would need to use it successfully. For example, use whole words and even phrases rather than abbreviations unless the abbreviations are ubiquitous and would be known by all users of your API. Using “HTTP” as an abbreviation is probably fine since there will likely be little confusion about what you’re referring to. But even though it can be annoying to type out, it’s better to avoid abbreviations like “HP” when referring to hyper-parameters since a user of your API won’t necessarily know what a hyper-parameter is and may be confused as to why your model has “hit points”. Being more verbose is usually always best to avoid confusion.

It’s also important to use language features like grammar and syntax to provide clues about how your API functions. Use singular and plural intentionally so that variables, parameters, and response objects that are plural are some kind of collection, while ones that are singular represent individual objects. Functions should always have a verb in them and certain variables can also have verb prefixes on them to indicate what data type they are. For example, “is_disabled” or “has_users” would be examples of using language to make it clear that this variable is a boolean type. Similarly “created_at” indicates that this variable is a timestamp. Using language to help the humans writing code to adopt your AI can reduce the amount of time that user has to spend going back to your documentation by providing enough contextual information in the language itself that they’ll intuitively know what to do.

Make it intuitive

Which brings us to the second thing that makes a great API: it should be intuitive. Using language effectively goes a long way to make your API intuitive but there are other things that can be done. Make sure that your vocabulary in your API is appropriate for the audience of the API. Be cautious and intentional about any technical terms you use in your functions, variables, routes, and documentation and be sure that those technical terms are either ones that would be well known to the intended audience of your API or well documented to teach users of your API what those technical terms mean and how they relate to your API.

Be consistent

Next, be consistent, not only within your own API but with similar well-known APIs and common best practices. Try not to invent a new authentication methodology or pagination system if you can help it. Instead try to conform to industry standard or well known conventions so that’s one less thing that your users have to learn and one less thing that the convenience libraries your users may be using will need to support. Remember, the goal at the end of the day is to make your API easy to use so if a user can’t use their favorite HTTP library because it doesn’t support the custom HTTP method you’re using, then that will make your API _harder_ to use for them because now they have to craft raw HTTP requests or switch to a different library that they’re unfamiliar with.

Another aspect of making APIs more intuitive is how you handle and return error messages. Great error messages are crucial to making a great API and can be particularly difficult because they serve a dual purpose. First, they serve as communication with the end user as to what went wrong, including any information that user might need to resolve the error and try again. Second, they serve as communication with the running program, as written by an application developer, to try and automatically resolve or make decisions based on the contents of the error. So great error messages need to be both human readable and machine readable and provide enough information to allow both the human and the program to make good decisions about what to do next and how to resolve the error.

Accessibility

We’ve talked a lot about how to make APIs great for humans but we need to remember that it will be machines that will be running the API calls so it’s important to make your APIs accessible to as many machines as possible. To accomplish this, you want to identify what kinds of machines will be calling your API the same way that you define what kinds of people will be using your API. In the emerging technology space and in AI in particular, this is crucially important because AI and IoT go hand-in-hand and more and more AI processing is done on the “edge” on IoT and other similar devices. And depending on the capabilities or interfaces of those devices, they may not support newer protocols like gRPC, WebSockets and the like, but even your refridgerator and coffee machine speak HTTP these days. Regardless of whether you’re using more modern web protocols or custom protocols designed for low-latency real-time inputs, consider also supporting traditional HTTP routes as well so that your API can be easily consumed by devices that may not allow users to upload their own custom code but may support something like webhooks over HTTP.

For APIs that extend beyond simple CRUD actions, prefer declarative APIs over imperative ones

Declarative APIs

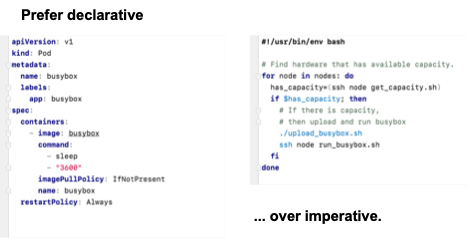

A declarative API is one the allows the user to express the outcome that they want given a set of inputs. Conversely, an imperative API is one that allows a user to have more control over the sequence of steps necessary to achieve a particular outcome, similar to if they wrote the program themself. There are places for both kinds of APIs but the advantage of a declarative API, especially for emerging technologies, is that the user of your API doesn’t have to know how the “magic” works in order to use it. Consider Figure 2 below. Before Kubernetes and container orchestrators we had Puppet and Chef scripts written out to automate our infrastructure and application deployments. It was a lot of code that required all the procedural steps and error handling necessary to have a high degree of confidence that the deployment would succeed even when things didn’t go perfectly. Consider the example on the right as a trivial example of such a script.

The introduction of Kubernetes and other container orchestrators allowed for a much simpler model that could be exposed to more and more developers because it allowed each developer to express what they wanted their end state to be and Kubernetes would handle all the difficult work of trying to make that end state a reality. This freed up DevOps and infrastructure engineers to where they no longer needed to know about how each part of the application worked in order to make sure it got deployed properly. They could leave that in the knowledgeable hands of the application developers themselves without needing to give them the keys to the kingdom.

For emerging technologies and AI in particular, this approach can yield a much better user experience for your API users. Consider a user who has a directory full of images that they want to run against a particular model. With an imperative API, that end user would likely need to know how to pre-process all the images to conform the input expectations of the model. It would need to ensure that the model is running and has the proper hardware available to it, including any special accelerator hardware like a GPU or FPGA. Maybe they would need to check to see if the model needs to download a new weights file that had been generated from a re-training loop. These are all things that the user of this API doesn’t know or care about. All they care about is getting their data processed and receiving a result back.

Declarative APIs, while much harder to write, eliminate any unnecessary knowledge or input requirementsfrom a user. It is much easier to wrap one’s brain around an API that allows one to say “I have data here, run it against version 2 of model B, go!” than to express the whole sequence of steps necessary to accomplish that outcome. As the technology becomes more ubiquitous and the knowledge of how the technology works becomes more widespread, adding imperative routes that allow a user to specifically tailor the process to their particular use case can be very powerful, but you will always have users that simply want their result and don’t care about how it happens. Declarative APIs provide a great way to account for them.

The Moving Target: Building APIs for Emerging Tech

We all know that technology moves fast. And emerging technology moves the fastest. When designing APIs around a new or emerging technology, be very thoughtful about what assumption you make as you’re building your API. Where at all possible, don’t assume that any one language, framework, protocol, or library will continue to enjoy the same position it does now. If you’ve been following the world of AI, then you’ll know that even over the last few years we’ve seen new frameworks, libraries, tools, languages, even hardware come out of nowhere and some have disappeared just as quickly after a short time.

Be careful with your assumptions

A well-designed API can weather these changes if the details of the implementation are sufficiently abstracted away from the public API contract so that as tides turn and technologies rise and fall, your API can stay consistent, leaving the complex migration process something that your internal teams may need to worry about, but your users, including any cross-team collaboration in a microservice environment, will not.

We all have to make educated guesses or assumptions. But since rewrites and major refactors are expensive and time consuming, pay thoughtful attention to your architecture and designs and be able to clearly articulate what assumptions you’re making and why, and what risk mitigation steps have been put in place in case a change does need to be made.

Don’t be too clever

Next I’d like to talk about a subject that hits close to home for me. When working with new technology, I am often tempted to “be too clever” with my architectures and designs and I have to constantly remind myself to not fall into that trap. As mentioned earlier, the end goal of your API is to make it easy to use. The more “clever” you are with your API, the more likely it is to either be harder to use because it’s different than anything anyone’s ever seen before, or it becomes harder to maintain because it’s done in a way that no one’s ever seen before. There’s always going to be an element of this with emerging technology so let the emerging technology part be the emerging part and try to not go overboard with things that look or behave differently than expected.

A simple example I like to go back to is to never underestimate the performance, reliability, and scalability of reading and returning files off a disk. In an AI application, each model will return a result and your API will probably need to store that result for later retrieval. Now at this point you might be thinking about standing up a database, or a document store, or a full-text index, or a graph database, or something similar. But you should ask yourself what the purpose of that is. How many fields in that model result are you actually going to index? Each model likely returns a different result structure appropriate to that model, which means that you can’t have a common schema that can handle everything. And even if you do index some of the fields, how does your API know what to do with that information? Standing up a database or document store means that you have one additional thing that you need to monitor, scale, and back up. And if your application is a modern cloud-native microservice application that scales horizontally, adding a relational database into the mix can create a single point of failure in your otherwise resilient architecture. A simple index with the few fields you need that points to a JSON document in an S3 bucket, for example, can be a cheap, performant, scalable, and resilient way to serve those results. It’s one less thing to have to back up and monitor, one less service to have to run and scale, and you can even offload the traffic to access those results from your application servers freeing up those connections for other connections to your API.

The moral of the story is to not let your excitement about using new technology stop you from making sound architecture decisions and make your API harder to use and harder to maintain than it needs to be.

An Emerging Tech API is still just an API

To that point, remember that your emerging technology API is still just an API. All the standard best practices still apply. It will be used by advanced users and machines as well as “legacy” users and machines. It will be used by people with deep expertise about the topic, and people who are just getting started. It will be used by people with advanced use cases and basic ones. And because of the fast moving nature of the ecosystem, you will need to keep your APIs current and effective with all the changes, advancements, and new techniques that come along.

And so let’s bring it back to where we started. Your API is going to be used by people. And more than one persona at that.

In Summary

So know your audience, both machines and people, and make your API great by using language that clearly communicates what your API does and how it can be used, use terminology appropriate for your audience, target the widest range of libraries and protocols as possible, following best practices for each so that your users will immediately recognize how to use your API based on previous experience, and make the really complicated things as simple as possible by providing a declarative way for users to indicate what outcomes they want with a technology that they may not fully understand yet.

For yourself, empower and protect yourself from rapid changes by making smart, conscious decisions about your dependencies and assumptions, and don’t over-engineer or re-invent things when a simpler or well-established solution will suffice.

This blog has been republished by AIIA. To view the original article, please click HERE.

Recent Comments