ARTICLES

Accelerate Machine Learning with a Unified Analytics Architecture

Book description: Machine learning has accelerated in several industries recently, enabling companies to automate decisions and act based on predicted futures. In time, nearly all major industries will embed ML into the core of their businesses, but right now the gap...

Putting together a continuous ML stack

Due to the increased usage of ML-based products within organizations, a new CI/CD-like paradigm is on the rise. On top of testing your code, building a package, and continuously deploying it, we must now incorporate CT (continuous training) that can be stochastically...

Hardware Accelerators for ML Inference

Many different types of hardware can accelerate ML computations: CPUs, GPUs, TPUs, FPGAs, ASICs, and more. Learn about the different types, when to use them, and how they can speed up ML inference and improve the performance of ML systems. This...

The Playbook to Monitor Your Model’s Performance in Production

As machine learning infrastructure has matured, the need for model monitoring has surged. Unfortunately, this growing demand has not led to a foolproof playbook that explains to teams how to measure their model’s performance. Performance analysis of production models...

How ML Model Testing Accelerates Model Improvement – Using Test-Driven Modeling for Fire Prediction

Motivation: In the United States since 2000, an average of 70,072 wildfires have burned an average of seven million acres per year. That is more than double the average annual acreage burned in the 1990s (3.3 million acres). In 2021 alone, nearly 6,000 structures burned, sixty...

Practical Data Centric AI in the Real World

Data-centric AI marks a dramatic shift from how we’ve done AI over the last decade. Instead of solving challenges with better algorithms, we focus on systematically engineering our data to get better and better predictions. But how does that work in the real world?...

A Data Scientist’s Guide to Identify and Resolve Data Quality Issues

Doing this early for your next project will save you weeks of effort and stress. If you’ve worked in the AI industry with real-world data, you understand the pain. No matter how streamlined the data collection process is, the data we’re about to model is always...

How To: Monitoring NLP Models in Production

Today, Natural Language Processing (NLP) models are one of the most popular applications of machine learning, with a wide variety of use cases. In fact, it’s projected that by 2025, revenue from NLP models will reach up to $43 billion along with AI in general. What is...

What Are Feature Stores and Why Are They Critical for Scaling Data Science?

The field of MLOps has grown up around the reality that while the theoretical ability of machine learning to make accurate predictions and solve complex problems is incredibly sophisticated, actually operationalizing machine learning is still a major blocker for most...

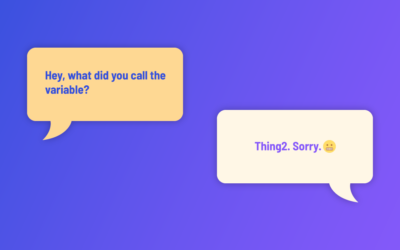

What’s in a name: URI generation and unique names for objects

Fixing linked-data pain points by bridging RDF and RDBMS ID generation. In TerminusDB, we have both automatic and manual means of describing the reference of a document in our graph. We have tried to make these as simple as possible to work with, based on our...
