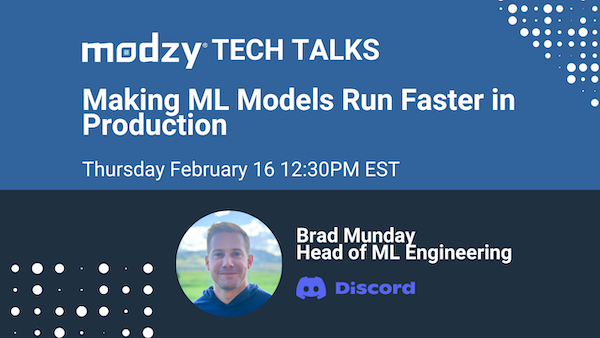

Speed and efficiency are critical in production ML, but optimizing model performance for different environments can be difficult. In this talk, we dive into techniques you can use to make your ML models run faster on any type of infrastructure. We cover batch processing and streaming, considerations unique to running models on edge devices, and practical tips for selecting the hardware and software configurations that make ML workloads successful. We also explore model compilation and other optimization techniques for improving performance, and discuss the trade-offs involved in different design choices.

This video covers four techniques you can implement to make your models run faster: running on GPUs vs. CPUs, batch processing, splitting pre/post-processing from inference, and model optimization and compilation. We discuss the merits of each technique and show a demo and benchmarks that demonstrate the impact of each.
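To make the batch processing idea concrete, here is a minimal sketch in plain Python. It is not taken from the talk: `ToyModel`, `batched`, and `run_inference` are hypothetical names, and the "model" is a trivial arithmetic function standing in for a real one. The sketch only shows that grouping inputs reduces the number of model invocations while leaving the outputs unchanged.

```python
from typing import Iterator, List, Sequence


def batched(items: Sequence[float], batch_size: int) -> Iterator[Sequence[float]]:
    """Yield consecutive slices of `items`, each at most `batch_size` long."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


class ToyModel:
    """Hypothetical stand-in for a real model; counts how often it is invoked."""

    def __init__(self) -> None:
        self.calls = 0

    def predict(self, batch: Sequence[float]) -> List[float]:
        # Each call stands in for the fixed overhead of one model invocation
        # (an assumption made for illustration only).
        self.calls += 1
        return [2.0 * x + 1.0 for x in batch]


def run_inference(model: ToyModel, inputs: Sequence[float], batch_size: int) -> List[float]:
    """Run the model over all inputs, one batch at a time, preserving order."""
    outputs: List[float] = []
    for batch in batched(inputs, batch_size):
        outputs.extend(model.predict(batch))
    return outputs


inputs = [float(i) for i in range(10)]

one_by_one = ToyModel()
single_outputs = run_inference(one_by_one, inputs, batch_size=1)

in_batches = ToyModel()
batched_outputs = run_inference(in_batches, inputs, batch_size=4)

# Same predictions, fewer model invocations.
print(one_by_one.calls, in_batches.calls)
```

How much time a larger batch actually saves depends on the model, the hardware, and the per-call overhead, so the batch size is best chosen by benchmarking in the target environment.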

This post has been republished by AIIA. To view the original content, please click HERE.
